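Using OpenCV

OpenCV 1.0: written mainly in C; fast, but with a steep learning curve.

OpenCV 2.0: added C++ and Python support.

OpenCV 3.0: further improved usability.

OpenCV 4.0: added more machine-learning libraries.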

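Add the include directories: View -> Other Windows -> Property Manager -> [project name] -> Debug|x64 -> Microsoft.Cpp.x64.user -> right-click Properties -> VC++ Directories -> Include Directories, then add: opencv/build/include, opencv/build/include/opencv2

Add the library directory (the import libraries for the DLLs): under Library Directories, add: opencv/build/x64/vc14/lib

Add the additional dependencies, that is, the *.lib files in the lib directory (for Debug builds use the file whose name ends in d): Linker -> Input -> Additional Dependencies, then add: opencv_world410d.lib

Add the directory that contains the OpenCV DLL files to the system PATH environment variable.

Restart Visual Studio.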

| Visual Studio version | OpenCV directory |
| --- | --- |
| Visual Studio 6 | vc6 |
| Visual Studio 2003 | vc7 |
| Visual Studio 2005 | vc8 |
| Visual Studio 2008 | vc9 |
| Visual Studio 2010 | vc10 |
| Visual Studio 2012 | vc11 |
| Visual Studio 2013 | vc12 |
| Visual Studio 2015 | vc14 |
| Visual Studio 2017 | vc15 |

    #include <opencv2/opencv.hpp>
    #include <iostream>
    using namespace std;
    using namespace cv;

    int main() {
        Mat src = imread("./lena.jpg");      // loaded as a BGR image
        if (src.empty()) return -1;          // file missing or unreadable
        namedWindow("input", WINDOW_AUTOSIZE);
        imshow("input", src);
        waitKey(0);                          // block until a key is pressed
        destroyAllWindows();
        return 0;
    }

    pip install opencv-python
    pip install opencv-contrib-python
    pip install pytesseract

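| Function | Description |
| --- | --- |
| imread(filename, mode) | Loads an image. The default is a 3-channel BGR color image; grayscale, unchanged, and any-depth/any-color modes are also supported. |
| imshow(winName, src) | Displays an image; the alpha (transparency) channel is not shown. |
| imwrite(filename, src) | Saves an image; the format is chosen from the file extension. |

imshow cannot display the transparency of an image that has an alpha channel. Load the file with IMREAD_UNCHANGED, save it, and inspect the result in an image viewer.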

| Function | Description |
| --- | --- |
| namedWindow(winName, mode) | Creates and configures a window |

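| Color space | Notes |
| --- | --- |
| RGB | Device-dependent color space used by displays and cameras |
| HSV | Gives the best results for histogram-based processing |
| Lab | Lightness plus two color channels (a, b) |
| YCbCr | |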

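Extracting the foreground in HSV by masking out a green background:

    Mat hsv, mask, dst;
    cvtColor(src, hsv, COLOR_BGR2HSV);
    inRange(hsv, Scalar(35, 43, 46), Scalar(77, 255, 255), mask);   // green range
    bitwise_not(mask, mask);              // invert: foreground becomes 255
    bitwise_and(src, src, dst, mask);     // keep only the masked pixels
    imshow("mask", mask);
    imshow("dst", dst);

Converting to HSV and Lab:

    Mat hsv, lab;
    cvtColor(src, hsv, COLOR_BGR2HSV);
    cvtColor(src, lab, COLOR_BGR2Lab);

Variables used in the Mat operation snippets that follow:

    Mat I, img, I1, I2, dst, A, B;
    double k, alpha;
    Scalar s;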

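| Basic attribute | Description |
| --- | --- |
| cols | Number of columns |
| rows | Number of rows |
| channels | Number of channels |
| type | Data type |
| total | Total number of elements |
| data | Pointer to the matrix data block |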

Multi-channel data types

    #define CV_8U   0
    #define CV_8S   1
    #define CV_16U  2
    #define CV_16S  3
    #define CV_32S  4
    #define CV_32F  5
    #define CV_64F  6
    #define CV_USRTYPE1 7

    #define CV_MAT_DEPTH_MASK       (CV_DEPTH_MAX - 1)
    #define CV_MAT_DEPTH(flags)     ((flags) & CV_MAT_DEPTH_MASK)

    #define CV_MAKETYPE(depth,cn) (CV_MAT_DEPTH(depth) + (((cn)-1) << CV_CN_SHIFT))
    #define CV_MAKE_TYPE CV_MAKETYPE

    #define CV_8UC1 CV_MAKETYPE(CV_8U,1)
    #define CV_8UC2 CV_MAKETYPE(CV_8U,2)
    #define CV_8UC3 CV_MAKETYPE(CV_8U,3)
    #define CV_8UC4 CV_MAKETYPE(CV_8U,4)

    // Addition
    I = I1 + I2;
    add(I1, I2, dst, mask, dtype);
    scaleAdd(I1, scale, I2, dst);            // dst = scale * I1 + I2

    // Subtraction
    absdiff(I1, I2, I);
    A - B;  A - s;  s - A;  -A;
    subtract(I1, I2, dst);

    // Multiplication
    I = I.mul(I);                            // element-wise
    Mat C = A.mul(5 / B);                    // element-wise
    A * B;                                   // matrix product
    I = alpha * I;
    A.cross(B);                              // Mat::cross(Mat)
    double d = A.dot(B);                     // double Mat::dot(Mat)
    multiply(I1, I2, dst);                   // function form of mul
    pow(src, p, dst);                        // p is a double

    // Division: both forms are element-wise
    divide(I1, I2, dst, scale, dtype);       // int dtype = -1
    A / B;  alpha / A;

    // Other operations
    exp(I, dst);  log(I, dst);
    randu(I, Scalar::all(0), Scalar::all(255));
    A.t();                                   // transpose
    A.inv(DECOMP_LU);                        // inverse; DECOMP_CHOLESKY (for symmetric matrices, about twice as fast as LU) and DECOMP_SVD are also available
    invert(I1, dst, DECOMP_LU);
    abs(A);                                  // returns MatExpr

    // Comparison: A cmpop B;  A cmpop alpha;  alpha cmpop A;
    // cmpop is one of >, >=, ==, !=, <=, <; the result is a CV_8UC1 mask of 0 or 255.

    // Bitwise: A logicop B;  A logicop s;  s logicop A;  ~A;
    // logicop is one of &, |, ^.
    bitwise_not(I, dst, mask);               // also bitwise_and, bitwise_or, bitwise_xor

    min(A, B);  min(A, alpha);  max(A, B);  max(A, alpha);   // return MatExpr; the result has the same type as A
    double determinant(Mat);
    bool eigen(I1, dst, int lowindex = -1, int highindex = -1);
    bool eigen(I1, dst, I, int ...);
    minMaxLoc(I1, &minVal, &maxVal, Point* minLoc = 0, Point* maxLoc = 0, mask);

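    // Alternative ways to create a Mat (each line stands on its own)
    Mat I(img, Rect(10, 10, 100, 100));          // ROI header, shares data with img
    Mat I = img(Range::all(), Range(1, 3));      // all rows, columns 1 and 2
    Mat I = img.clone();                         // deep copy
    img.copyTo(I);                               // deep copy
    Mat I(2, 2, CV_8UC3, Scalar(0, 0, 255));
    Mat E = Mat::eye(4, 4, CV_64F);
    Mat O = Mat::ones(2, 2, CV_32F);
    Mat Z = Mat::zeros(3, 3, CV_8UC1);
    Mat C = (Mat_<double>(2, 2) << 0, -1, 2, 3);

    // Mat::row(i), Mat::col(j), Mat::rowRange(start, end), Mat::colRange(start, end)
    // only create a new header over the same data.
    // Mat::diag(int d): d = 0 is the main diagonal, d = -1 the diagonal just below it, d = 1 the one just above it.
    // static Mat Mat::diag(const Mat& matD)
    // Mat::setTo(const Scalar& s): sets every element to s.
    // Mat::push_back(Mat): appends rows after the last row of the Mat.
    // Mat::pop_back(size_t nelems = 1)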

    (1)  Mat::Mat()
    (2)  Mat::Mat(int rows, int cols, int type)
    (3)  Mat::Mat(Size size, int type)
    (4)  Mat::Mat(int rows, int cols, int type, const Scalar& s)
    (5)  Mat::Mat(Size size, int type, const Scalar& s)
    (6)  Mat::Mat(const Mat& m)
    (7)  Mat::Mat(int rows, int cols, int type, void* data, size_t step = AUTO_STEP)
    (8)  Mat::Mat(Size size, int type, void* data, size_t step = AUTO_STEP)
    (9)  Mat::Mat(const Mat& m, const Range& rowRange, const Range& colRange)
    (10) Mat::Mat(const Mat& m, const Rect& roi)
    (11) Mat::Mat(const CvMat* m, bool copyData = false)
    (12) Mat::Mat(const IplImage* img, bool copyData = false)
    (13) template<typename T, int n> explicit Mat::Mat(const Vec<T, n>& vec, bool copyData = true)
    (14) template<typename T, int m, int n> explicit Mat::Mat(const Matx<T, m, n>& vec, bool copyData = true)
    (15) template<typename T> explicit Mat::Mat(const vector<T>& vec, bool copyData = false)
    (16) Mat::Mat(const MatExpr& expr)
    (17) Mat::Mat(int ndims, const int* sizes, int type)
    (18) Mat::Mat(int ndims, const int* sizes, int type, const Scalar& s)
    (19) Mat::Mat(int ndims, const int* sizes, int type, void* data, const size_t* steps = 0)

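Access with at<>()

Access with pointers

Access with iterators

(1) Single channel:

    for (int i = 0; i < I.rows; i++)
        for (int j = 0; j < I.cols; j++)
            I.at<uchar>(i, j) = k;

(2) Three channels:

(3) Other mechanisms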

    I.row(0).setTo(Scalar(0));
    saturate_cast<uchar>(…);         // clamps to the range of the target type
    Mat::total();                    // total number of elements
    size_t Mat::elemSize();          // bytes per element, e.g. CV_16SC3: 3 * sizeof(short) -> 6
    size_t Mat::elemSize1();         // bytes per channel, e.g. CV_16SC3: sizeof(short) -> 2
    int Mat::type();                 // the type, e.g. CV_16SC3
    int Mat::depth();                // the depth: CV_16SC3 -> CV_16S
    int Mat::channels();             // number of channels
    size_t Mat::step1();             // step divided by elemSize1()
    Mat::size();                     // Size(cols, rows), width first; (-1, -1) for more than 2 dimensions
    bool Mat::empty();               // true if there are no elements, i.e. total() == 0 or data == NULL
    uchar* Mat::ptr(int i = 0);      // pointer to row i
    Mat::at<T>(int i);  at<T>(int i, int j);  at<T>(Point pt);  at<T>(int i, int j, int k);

    // RNG random number class
    RNG::next();
    float RNG::uniform(float a, float b);
    double RNG::gaussian(double sigma);
    RNG::fill(I, int distType, Mat low, Mat up);
    randu(I, low, high);
    randn(I, Mat mean, Mat stddev);
    reduce(I, dst, int dim, int reduceOp, int dtype = -1);
    setIdentity(dst, const Scalar& value = Scalar(1));

    getConvertElem, extractImageCOI, LUT
    magnitude(x, y, dst);
    meanStdDev
    mulSpectrums(I1, I2, dst, flags);    // per-element product of two Fourier spectra
    normalize(I, dst, alpha, beta, int normType = NORM_L2, int rtype = -1, mask);
    PCA, SVD, solve, transform, transpose

    Point2f P(5, 1);
    Point3f P3f(2, 6, 7);
    vector<float> v;
    v.push_back((float)CV_PI); v.push_back(2); v.push_back(3.01f);
    vector<Point2f> vPoints(20);

    Point p1 = {2, 3};
    Point p2 = Point2i(3, 4);

    Size a = Size(5, 6);
    cout << "a.width=" << a.width << ":a.height=" << a.height;

    Rect rect = Rect(100, 50, 10, 20);   // x, y, width, height
    cout << rect.area() << endl;
    cout << rect.size() << endl;
    cout << rect.tl() << endl;           // top-left corner
    cout << rect.br() << endl;           // bottom-right corner
    cout << rect.width << endl;
    cout << rect.height << endl;
    cout << rect.contains(Point(101, 51)) << endl;

    Mat mask = src < 0;                  // a quick way to build a mask

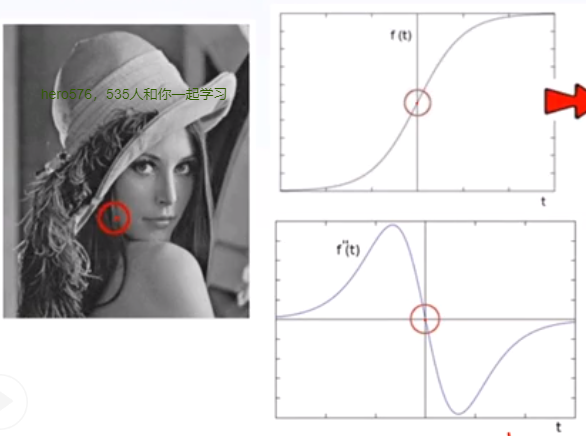

Continuous convolution:

$$(f*g)(n)=\int_{-\infty}^{\infty} f(\tau)\,g(n-\tau)\,d\tau$$

Discrete convolution:

$$(f*g)(n)=\sum_{\tau=-\infty}^{\infty} f(\tau)\,g(n-\tau)$$
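For finite sequences, the discrete convolution sum can be sketched as below. This is a minimal illustration in plain C++ rather than OpenCV code: the name `convolve_full` is hypothetical, and samples outside the given sequences are assumed to be zero.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Full discrete convolution of two finite sequences.
// out[n] = sum over tau of f[tau] * g[n - tau], with out-of-range samples treated as 0.
std::vector<double> convolve_full(const std::vector<double>& f,
                                  const std::vector<double>& g) {
    assert(!f.empty() && !g.empty());
    std::vector<double> out(f.size() + g.size() - 1, 0.0);
    for (std::size_t i = 0; i < f.size(); ++i) {
        for (std::size_t j = 0; j < g.size(); ++j) {
            out[i + j] += f[i] * g[j];   // f[i] contributes to output index n = i + j
        }
    }
    return out;
}
```

Convolving `{1, 2, 3}` with `{0, 1, 0.5}` this way yields a sequence of length 5; image filtering applies the same idea in two dimensions with a small kernel.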

Blurring

Gradients

Edges

Sharpening

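| Attribute | Description |
| --- | --- |
| cols | Number of columns (image width) |
| rows | Number of rows (image height) |
| channels() | Number of channels |
| depth() | Depth |
| type() | Image type enumeration |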

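| Image depth | Description |
| --- | --- |
| CV_8U | 8-bit unsigned (byte) |
| CV_8S | 8-bit signed (byte) |
| CV_16U | 16-bit unsigned |
| CV_16S | 16-bit signed |
| CV_32S | 32-bit signed integer |
| CV_32F | 32-bit floating point |
| CV_64F | 64-bit double-precision floating point |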

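| Channels | Description | Example |
| --- | --- | --- |
| C1 | Single channel | CV_8UC1 |
| C2 | Two channels | CV_8UC2 |
| C3 | Three channels | CV_8UC3 |
| C4 | Four channels | CV_8UC4 |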

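| Task | Code | Notes |
| --- | --- | --- |
| Create a blank Mat | `Mat t1 = Mat(256,256,CV_8UC1); t1 = Scalar(0);` `Mat t2 = Mat(256,256,CV_8UC3); t2 = Scalar(0,0,0);` `Mat t3 = Mat(Size(256,256),CV_8UC1); t3 = Scalar(0);` `Mat t4 = Mat::zeros(Size(256,256),CV_8UC1);` | |
| Create from an existing image | `Mat t1 = src;` (shares the data, no copy) `Mat t2 = src.clone();` (deep copy) `Mat t3; src.copyTo(t3);` `Mat t4 = Mat::zeros(src.size(),src.type());` | |
| Create with a fill value | | Assigning a Scalar fills the Mat |
| Create single- and multi-channel Mats | | The type is set when the object is created |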

Pixel traversal with at<>():

    for (int row = 0; row < src.rows; row++) {
        for (int col = 0; col < src.cols; col++) {
            if (src.channels() == 3) {
                Vec3b pixel = src.at<Vec3b>(row, col);
                int blue = pixel[0], green = pixel[1], red = pixel[2];   // BGR order
            } else if (src.channels() == 1) {
                int pv = src.at<uchar>(row, col);
            }
        }
    }

Pixel traversal with row pointers:

    for (int row = 0; row < src.rows; row++) {
        uchar* curr_row = src.ptr<uchar>(row);       // pointer to the start of this row
        for (int col = 0; col < src.cols; col++) {
            if (src.channels() == 3) {
                int blue = *curr_row++;
                int green = *curr_row++;
                int red = *curr_row++;
            } else if (src.channels() == 1) {
                int pv = *curr_row++;
            }
        }
    }

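OpenCV provides vector template classes such as Vec2b, Vec2s, Vec3b, Vec3s, Vec3i, and Vec3f.

| Symbol | Definition | Description |
| --- | --- | --- |
| Vec3b | `typedef Vec<uchar,3> Vec3b;` | A vector of 3 uchar elements |
| Vec3s | `typedef Vec<short,3> Vec3s;` | A vector of 3 short int elements |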

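When adding, subtracting, multiplying, or dividing images, the two operand matrices must have the same size and type.

For uchar data, results that fall outside 0 to 255 are saturated (clamped) rather than wrapped around.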
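The out-of-range handling for uchar arithmetic can be sketched in plain C++ as follows. The helpers `saturate_u8`, `add_u8`, and `sub_u8` are hypothetical names for illustration; they only mimic, for integer inputs, the clamping that `saturate_cast<uchar>` performs.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical helper: clamp an int into the 8-bit range [0, 255].
inline std::uint8_t saturate_u8(int v) {
    return static_cast<std::uint8_t>(std::min(255, std::max(0, v)));
}

// Saturating addition of two 8-bit pixel values.
inline std::uint8_t add_u8(std::uint8_t a, std::uint8_t b) {
    return saturate_u8(static_cast<int>(a) + static_cast<int>(b));
}

// Saturating subtraction of two 8-bit pixel values.
inline std::uint8_t sub_u8(std::uint8_t a, std::uint8_t b) {
    return saturate_u8(static_cast<int>(a) - static_cast<int>(b));
}
```

With plain `unsigned char` arithmetic, 200 + 100 would wrap around to 44; with saturation it stays at 255, which is why bright regions clip to white instead of turning dark.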

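| Function | Description |
| --- | --- |
| add(src1, src2, dst) | Addition |
| subtract(src1, src2, dst) | Subtraction |
| multiply(src1, src2, dst) | Element-wise multiplication |
| divide(src1, src2, dst) | Element-wise division |
| addWeighted(src1, alpha, src2, beta, gamma, dst) | Multiplies each image by its weight and adds them (plus the offset gamma); raising a weight increases contrast |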

    Mat src1 = imread("./1.jpg");
    Mat src2 = imread("./2.jpg");        // must match src1 in size and type
    Mat dst1, dst2, dst3, dst4, dst5;
    add(src1, src2, dst1);
    subtract(src1, src2, dst2);
    multiply(src1, src2, dst3);
    divide(src1, src2, dst4);
    addWeighted(src1, 1.2, src2, 0.5, 0.0, dst5);

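Bitwise operations include AND, OR, NOT, and XOR.

Bitwise operations on images are usually more efficient.

A bitwise operation can also be restricted to the non-zero region of a mask for local processing; the mask must have exactly 1 channel.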

| Function | Description |
| --- | --- |
| bitwise_not(src, dst, mask) | NOT |
| bitwise_and(src1, src2, dst, mask) | AND |
| bitwise_or(src1, src2, dst, mask) | OR |
| bitwise_xor(src1, src2, dst, mask) | XOR |

    Mat src = imread("./1.jpg");
    Mat mask = Mat::zeros(src.size(), CV_8UC1);
    for (int row = 0; row < src.rows; row++)
        for (int col = 0; col < src.cols; col++)
            mask.at<uchar>(row, col) = 255;      // full mask; narrow the loops to process a local region
    Mat dst1, dst2, dst3, dst4;
    bitwise_not(src, dst1, mask);
    bitwise_and(src, src, dst2, mask);
    bitwise_or(src, src, dst3, mask);
    bitwise_xor(src, src, dst4, mask);

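| Function | Description |
| --- | --- |
| minMaxLoc(src, double* minVal, double* maxVal, Point* minLoc, Point* maxLoc) | Finds the minimum and maximum values and their positions; src must be single-channel |
| Scalar mean(src) | Returns the mean of each channel |
| meanStdDev(src, mean, stddev) | Mean and standard deviation of each channel |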

    Mat src = imread("./1.jpg", IMREAD_GRAYSCALE);
    double xmin, xmax;
    Point xminloc, xmaxloc;
    minMaxLoc(src, &xmin, &xmax, &xminloc, &xmaxloc);
    src = imread("./1.jpg");
    Scalar s = mean(src);
    Mat xmean, mstd;
    meanStdDev(src, xmean, mstd);            // one CV_64F row per channel
    printf("stddev:%.2f,%.2f,%.2f", mstd.at<double>(0, 0), mstd.at<double>(1, 0), mstd.at<double>(2, 0));

Counting a grayscale histogram by hand:

    Mat src = imread("./1.jpg", IMREAD_GRAYSCALE);
    vector<int> hist(256, 0);
    for (int row = 0; row < src.rows; row++) {
        for (int col = 0; col < src.cols; col++) {
            int pv = src.at<uchar>(row, col);
            hist[pv]++;
        }
    }

Points, lines, rectangles, circles, ellipses, polygons, text

When the thickness is set to a value below zero, OpenCV fills the shape.

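| Function | Description |
| --- | --- |
| line(src, Point, Point, Scalar color, int thickness, int lineType) | Draws a line segment |
| rectangle(src, Rect, Scalar color, int thickness, int lineType) | Draws a rectangle |
| circle(src, Point, int radius, Scalar color, int thickness, int lineType) | Draws a circle |
| ellipse(src, RotatedRect, Scalar color, int thickness, int lineType) | Draws the ellipse inscribed in a rotated rectangle |
| putText(src, string, Point, int fontFace, double scale, Scalar color, int thickness, int lineType) | Draws text |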

    Mat canvas = Mat::zeros(Size(512, 512), CV_8UC3);
    line(canvas, Point(10, 10), Point(20, 123), Scalar(0, 0, 255), 1, LINE_8);
    Rect rect(100, 100, 200, 200);
    rectangle(canvas, rect, Scalar(255, 0, 0), 1, 8);
    circle(canvas, Point(255, 255), 100, Scalar(255, 0, 0), 1, 8);
    RotatedRect rt;
    rt.center = Point(256, 256);
    rt.angle = 45;
    rt.size = Size(100, 200);
    ellipse(canvas, rt, Scalar(255, 0, 0), -1, 8);        // negative thickness fills the ellipse
    putText(canvas, "hello", Point(100, 50), FONT_HERSHEY_SIMPLEX, 1.0, Scalar(0, 0, 255), 2, 8);
    imshow("canvas", canvas);

Drawing random lines until Esc is pressed:

    Mat canvas = Mat::zeros(Size(512, 512), CV_8UC3);
    RNG rng(12345);
    while (true) {
        int x1 = rng.uniform(0, 512), x2 = rng.uniform(0, 512);
        int y1 = rng.uniform(0, 512), y2 = rng.uniform(0, 512);
        Scalar color(rng.uniform(0, 256), rng.uniform(0, 256), rng.uniform(0, 256));
        int thickness = rng.uniform(1, 20);
        line(canvas, Point(x1, y1), Point(x2, y2), color, thickness, LINE_8);
        imshow("canvas", canvas);
        char c = (char)waitKey(10);
        if (c == 27) break;                               // Esc
    }

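| Function | Description |
| --- | --- |
| split(src, vector) | Splits a multi-channel image into single-channel Mats |
| merge(vector, dst) | Merges single-channel Mats into one multi-channel image |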

    vector<Mat> rgbCh(3);
    split(src, rgbCh);                                    // channels come out in BGR order
    Mat blue = rgbCh[0], green = rgbCh[1], red = rgbCh[2];
    Mat dst;
    vector<Mat> merge_src;
    merge_src.push_back(blue);
    merge_src.push_back(green);
    merge_src.push_back(red);
    merge(merge_src, dst);

Modifying channels before merging:

    Mat src = imread("./1.jpg");                          // color image, so that three channels exist
    if (src.empty()) return -1;
    vector<Mat> rgbCh;
    split(src, rgbCh);
    rgbCh[0] = Scalar(0);                                 // zero the blue channel
    bitwise_not(rgbCh[1], rgbCh[1]);                      // invert the green channel
    Mat dst;
    merge(rgbCh, dst);
    imshow("merge", dst);

Extracting a region of interest (ROI):

    Mat src = imread("./1.jpg");
    Rect roi;
    roi.x = 100; roi.y = 100; roi.width = 200; roi.height = 200;   // must lie inside the image
    Mat dst = src(roi).clone();                           // copy the ROI before drawing on src
    rectangle(src, roi, Scalar(0, 0, 255), 1, 8);
    imshow("src", src);
    imshow("dst", dst);
    waitKey(0);
    destroyAllWindows();

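Counting and display: for a CV_8UC1 grayscale image, pixel values range from 0 to 255. With 16 bins, each bin covers 16 gray levels (the first covers 0 to 15). The histogram shows how many pixels fall into each bin.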
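The binning described above can be sketched without OpenCV as follows. The function name `binned_histogram` is hypothetical, and the sketch assumes the bin count divides 256 evenly.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Count 8-bit pixel values into `bins` equal-width bins.
// With bins = 16, each bin covers 256 / 16 = 16 gray levels.
std::vector<int> binned_histogram(const std::vector<std::uint8_t>& pixels, int bins) {
    assert(bins > 0 && 256 % bins == 0);
    const int width = 256 / bins;            // gray levels per bin
    std::vector<int> hist(bins, 0);
    for (std::uint8_t p : pixels) {
        hist[p / width]++;                   // integer division selects the bin
    }
    return hist;
}
```

For the pixel values 0, 15, 16, and 255 with 16 bins, the first two land in bin 0, the third in bin 1, and the last in bin 15.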

| Function | Description |
| --- | --- |
| calcHist() | Computes a histogram |
| normalize(src, dst, ...) | Normalization |

    Mat src = imread("./1.jpg");                          // color image
    if (src.empty()) return -1;
    vector<Mat> rgbCh;
    split(src, rgbCh);
    Mat blue = rgbCh[0], green = rgbCh[1], red = rgbCh[2];
    int histSize = 256;
    float range[] = {0, 256};                             // upper bound is exclusive
    const float* ranges[] = {range};
    Mat hist_b, hist_g, hist_r;
    calcHist(&blue, 1, 0, Mat(), hist_b, 1, &histSize, ranges, true, false);
    calcHist(&green, 1, 0, Mat(), hist_g, 1, &histSize, ranges, true, false);
    calcHist(&red, 1, 0, Mat(), hist_r, 1, &histSize, ranges, true, false);

    Mat HistShow = Mat::zeros(Size(600, 400), CV_8UC3);
    int margin = 50;
    int nm = HistShow.rows - 2 * margin;                  // drawable height
    normalize(hist_b, hist_b, 0, nm, NORM_MINMAX, -1, Mat());
    normalize(hist_g, hist_g, 0, nm, NORM_MINMAX, -1, Mat());
    normalize(hist_r, hist_r, 0, nm, NORM_MINMAX, -1, Mat());
    float step = (HistShow.cols - 2 * margin) / 256.0f;   // horizontal pixels per bin
    for (int i = 0; i < 255; i++) {
        float bh1 = hist_b.at<float>(i, 0), bh2 = hist_b.at<float>(i + 1, 0);
        float gh1 = hist_g.at<float>(i, 0), gh2 = hist_g.at<float>(i + 1, 0);
        float rh1 = hist_r.at<float>(i, 0), rh2 = hist_r.at<float>(i + 1, 0);
        int x1 = cvRound(margin + step * i), x2 = cvRound(margin + step * (i + 1));
        line(HistShow, Point(x1, cvRound(margin + nm - bh1)), Point(x2, cvRound(margin + nm - bh2)), Scalar(255, 0, 0), 1, 8, 0);
        line(HistShow, Point(x1, cvRound(margin + nm - gh1)), Point(x2, cvRound(margin + nm - gh2)), Scalar(0, 255, 0), 1, 8, 0);
        line(HistShow, Point(x1, cvRound(margin + nm - rh1)), Point(x2, cvRound(margin + nm - rh2)), Scalar(0, 0, 255), 1, 8, 0);
    }
    imshow("HistShow", HistShow);
    waitKey(0);

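An image usually displays better after histogram equalization: dark regions become brighter. Example: a 64*64 image with 8 bins (gray levels) and 4096 pixels in total.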

| $k$ | $r_k$ | $n_k$ | $\frac{n_k}{n}$ | $S_k=\sum_{j=0}^{k}\frac{n_j}{n}$ | $p_S(s_k)$ |
| --- | --- | --- | --- | --- | --- |
| 0 | $\frac{0}{7}$ | 790 | 0.19 | 0.19 -> $\frac{1}{7}$ ($s_0$) | 0.19 |
| 1 | $\frac{1}{7}$ | 1023 | 0.25 | 0.44 -> $\frac{3}{7}$ ($s_1$) | 0.25 |
| 2 | $\frac{2}{7}$ | 850 | 0.21 | 0.65 -> $\frac{5}{7}$ ($s_2$) | 0.21 |
| 3 | $\frac{3}{7}$ | 656 | 0.16 | 0.81 -> $\frac{6}{7}$ ($s_3$) | 0.24 |
| 4 | $\frac{4}{7}$ | 329 | 0.08 | 0.89 -> $\frac{6}{7}$ ($s_3$) | 0.24 |
| 5 | $\frac{5}{7}$ | 245 | 0.06 | 0.95 -> $\frac{7}{7}$ ($s_4$) | 0.11 |
| 6 | $\frac{6}{7}$ | 122 | 0.03 | 0.98 -> $\frac{7}{7}$ ($s_4$) | 0.11 |
| 7 | $\frac{7}{7}$ | 81 | 0.02 | 1.0 -> $\frac{7}{7}$ ($s_4$) | 0.11 |

    Mat src = imread("./1.jpg"), gray, dst;       // load in color, then convert
    cvtColor(src, gray, COLOR_BGR2GRAY);
    imshow("gray", gray);
    equalizeHist(gray, dst);                      // input must be 8-bit single-channel
    imshow("hist", dst);
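The mapping in the worked example (64*64 image, 8 levels) can be reproduced with a short sketch. It implements the textbook rule "new level = round((L-1) * cumulative fraction)"; the name `equalize_mapping` is hypothetical, and this is not claimed to match the exact arithmetic inside `equalizeHist`.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Map each input level k to round((L - 1) * CDF(k)), where L is the number of levels
// and CDF(k) is the fraction of pixels with level <= k.
std::vector<int> equalize_mapping(const std::vector<long>& counts) {
    assert(!counts.empty());
    long total = 0;
    for (long c : counts) total += c;
    assert(total > 0);
    const int levels = static_cast<int>(counts.size());
    std::vector<int> mapping(levels);
    long cumulative = 0;
    for (int k = 0; k < levels; ++k) {
        cumulative += counts[k];
        const double cdf = static_cast<double>(cumulative) / static_cast<double>(total);
        mapping[k] = static_cast<int>(std::lround(cdf * (levels - 1)));
    }
    return mapping;
}
```

Feeding in the counts 790, 1023, 850, 656, 329, 245, 122, 81 gives the output levels 1, 3, 5, 6, 6, 7, 7, 7, the same quantized values (in sevenths) as the cumulative column of the table.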

Histogram comparison measures how similar the histogram distributions of two images are.

Correlation:

$$d(H_1,H_2)=\frac{\sum_I{(H_1(I)-\hat{H}_1)(H_2(I)-\hat{H}_2)}}{\sqrt{\sum_I{(H_1(I)-\hat{H}_1)^2}\sum_I{(H_2(I)-\hat{H}_2)^2}}}$$

where $N$ is the number of bins and

$$\hat{H}_k=\frac{1}{N}\sum_J{H_k(J)}$$

Chi-square:

$$d(H_1,H_2)=\sum_I{\frac{(H_1(I)-H_2(I))^2}{H_1(I)}}$$

Intersection:

$$d(H_1,H_2)=\sum_I{\min(H_1(I),H_2(I))}$$

Bhattacharyya distance:

$$d(H_1,H_2)=\sqrt{1-\frac{1}{\sqrt{\hat{H}_1\hat{H}_2N^2}}\sum_I{\sqrt{H_1(I)\cdot H_2(I)}}}$$
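Two of these measures, correlation and intersection, are simple enough to sketch directly on plain vectors. The function names are hypothetical and the code is an illustration of the formulas, not the `compareHist` implementation; the correlation sketch assumes neither histogram is constant.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Correlation between two histograms with the same number of bins.
double hist_correlation(const std::vector<double>& h1, const std::vector<double>& h2) {
    assert(h1.size() == h2.size() && !h1.empty());
    const double n = static_cast<double>(h1.size());
    double mean1 = 0.0, mean2 = 0.0;
    for (std::size_t i = 0; i < h1.size(); ++i) { mean1 += h1[i]; mean2 += h2[i]; }
    mean1 /= n;
    mean2 /= n;
    double num = 0.0, d1 = 0.0, d2 = 0.0;
    for (std::size_t i = 0; i < h1.size(); ++i) {
        const double a = h1[i] - mean1, b = h2[i] - mean2;
        num += a * b;
        d1 += a * a;
        d2 += b * b;
    }
    assert(d1 > 0.0 && d2 > 0.0);            // assumption: histograms are not constant
    return num / std::sqrt(d1 * d2);
}

// Intersection: sum of the bin-wise minimum.
double hist_intersection(const std::vector<double>& h1, const std::vector<double>& h2) {
    assert(h1.size() == h2.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < h1.size(); ++i) sum += std::min(h1[i], h2[i]);
    return sum;
}
```

Correlation is scale-invariant (a histogram and its doubled copy correlate perfectly), whereas intersection depends on absolute bin heights, which is one reason histograms are normalized before comparison.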

    Mat src1 = imread("./1.jpg");                 // color images, so that channels 0..2 exist
    Mat src2 = imread("./2.jpg");
    int histSize[] = {256, 256, 256};
    int channels[] = {0, 1, 2};
    Mat hist1, hist2;
    float c1[] = {0, 256};                        // upper bound is exclusive
    float c2[] = {0, 256};
    float c3[] = {0, 256};
    const float* histRanges[] = {c1, c2, c3};
    calcHist(&src1, 1, channels, Mat(), hist1, 3, histSize, histRanges, true, false);
    calcHist(&src2, 1, channels, Mat(), hist2, 3, histSize, histRanges, true, false);
    normalize(hist1, hist1, 0, 1.0, NORM_MINMAX, -1, Mat());
    normalize(hist2, hist2, 0, 1.0, NORM_MINMAX, -1, Mat());
    double h12 = compareHist(hist1, hist2, HISTCMP_BHATTACHARYYA);
    double h11 = compareHist(hist1, hist1, HISTCMP_BHATTACHARYYA);   // a histogram compared with itself
    printf("%.2f,%.2f", h12, h11);

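A lookup table (LUT) is a one- or multi-dimensional array that stores the output value for each input value. It defines, as a one-to-one or many-to-one function, how each pixel value is converted to a new value.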
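The idea can be sketched in plain C++ with a 256-entry table for 8-bit pixels. The names `make_invert_lut` and `apply_lut` are hypothetical, and the inversion table is just one example of a one-to-one mapping.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Build a 256-entry table that inverts gray levels (v -> 255 - v).
std::array<std::uint8_t, 256> make_invert_lut() {
    std::array<std::uint8_t, 256> lut{};
    for (int v = 0; v < 256; ++v) {
        lut[static_cast<std::size_t>(v)] = static_cast<std::uint8_t>(255 - v);
    }
    return lut;
}

// Replace every pixel by the table entry indexed by its value.
std::vector<std::uint8_t> apply_lut(const std::vector<std::uint8_t>& src,
                                    const std::array<std::uint8_t, 256>& lut) {
    std::vector<std::uint8_t> dst(src.size());
    for (std::size_t i = 0; i < src.size(); ++i) {
        dst[i] = lut[src[i]];                // one array read per pixel, no per-pixel arithmetic
    }
    return dst;
}
```

Because the mapping is computed once for the 256 possible inputs, applying it costs a single table read per pixel regardless of how expensive the original function is.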

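| Function | Description |
| --- | --- |
| LUT(src, lut, dst) | Replaces colors through a lookup table |
| applyColorMap(src, dst, COLORMAP_AUTUMN) | Replaces colors using one of the 12 built-in color lookup tables |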

    Mat src = imread("./1.jpg");
    Mat color = imread("./lut.jpg");              // color strip; must be at least 256 px wide and 11 px tall
    Mat lut = Mat::zeros(256, 1, CV_8UC3);
    for (int i = 0; i < 256; i++) {
        lut.at<Vec3b>(i, 0) = color.at<Vec3b>(10, i);   // sample row 10 of the strip
    }
    Mat dst;
    LUT(src, lut, dst);
    imshow("color", color);
    imshow("dst", dst);

    Mat model, src;                               // model: sample of the target; src: image to search (load both first)
    Mat model_hsv, src_hsv;
    cvtColor(model, model_hsv, COLOR_BGR2HSV);
    cvtColor(src, src_hsv, COLOR_BGR2HSV);
    int h_bins = 32, s_bins = 32;
    int histSize[] = {h_bins, s_bins};
    int channels[] = {0, 1};                      // hue and saturation
    Mat roiHist;
    float h_range[] = {0, 180}, s_range[] = {0, 256};
    const float* ranges[] = {h_range, s_range};
    calcHist(&model_hsv, 1, channels, Mat(), roiHist, 2, histSize, ranges, true, false);
    normalize(roiHist, roiHist, 0, 255, NORM_MINMAX, -1, Mat());   // the 0..255 range is an assumption; the original omitted it
    Mat backproj;
    calcBackProject(&src_hsv, 1, channels, roiHist, backproj, ranges, 1.0);
    imshow("backproject", backproj);

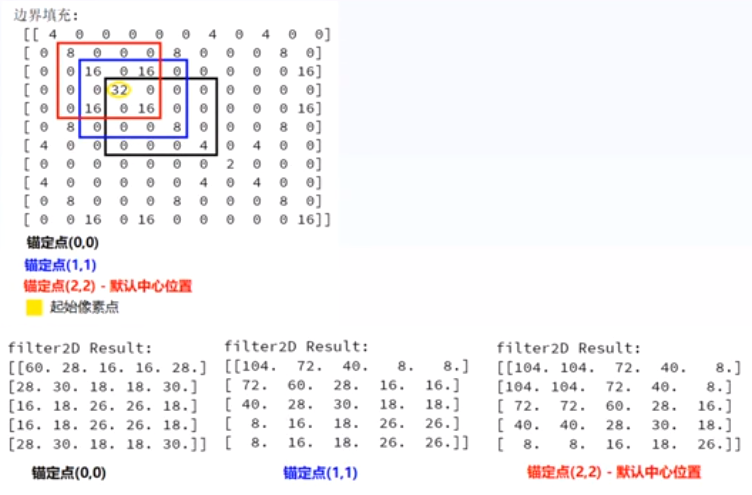

Custom kernels with filter2D:

    int k = 15;
    Mat meankernel = Mat::ones(k, k, CV_32F) / (float)(k * k);      // k x k mean kernel
    filter2D(src, dst, -1, meankernel, Point(-1, -1), 0, BORDER_DEFAULT);

    Mat roberts = (Mat_<float>(2, 2) << 1, 0, 0, -1);               // Roberts gradient kernel
    filter2D(src, dst, CV_32F, roberts, Point(-1, -1), 127, BORDER_DEFAULT);   // delta = 127 offsets negative responses
    convertScaleAbs(dst, dst);

A hand-written 3x3 mean filter:

    Mat src = imread("./1.jpg");
    Mat dst = Mat::zeros(src.size(), src.type());
    int h = src.rows, w = src.cols;
    for (int row = 1; row < h - 1; row++) {
        for (int col = 1; col < w - 1; col++) {
            int sb = 0, sg = 0, sr = 0;
            for (int dy = -1; dy <= 1; dy++) {
                for (int dx = -1; dx <= 1; dx++) {
                    Vec3b p = src.at<Vec3b>(row + dy, col + dx);
                    sb += p[0]; sg += p[1]; sr += p[2];
                }
            }
            dst.at<Vec3b>(row, col)[0] = sb / 9;   // write to dst so later sums still read original pixels
            dst.at<Vec3b>(row, col)[1] = sg / 9;
            dst.at<Vec3b>(row, col)[2] = sr / 9;
        }
    }
    imshow("conv", dst);

The same result with the built-in function:

    Mat src = imread("./1.jpg");
    Mat dst;
    blur(src, dst, Size(3, 3), Point(-1, -1), BORDER_DEFAULT);
    imshow("conv", dst);

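| Border type | Padding pattern (left / image / right) |
| --- | --- |
| BORDER_CONSTANT | 0000 / abcd / 0000 |
| BORDER_REPLICATE | aaaa / abcd / dddd |
| BORDER_WRAP | bcd / abcd / abc |
| BORDER_REFLECT_101 | dcb / abcd / cba |
| BORDER_DEFAULT | dcb / abcd / cba |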

    int border = 8;
    copyMakeBorder(src, dst, border, border, border, border, BORDER_DEFAULT);
    copyMakeBorder(src, dst, border, border, border, border, BORDER_CONSTANT, Scalar(0, 0, 255));   // red constant border
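The padding patterns in the table amount to a rule that maps an out-of-range index back into the image. A minimal sketch of three of those rules follows; the function names are hypothetical, and each assumes the index lies less than one image length outside the valid range.

```cpp
#include <cassert>

// Replicate: clamp to the nearest valid index (aaaa / abcd / dddd).
int border_replicate(int p, int len) {
    assert(len > 0);
    return p < 0 ? 0 : (p >= len ? len - 1 : p);
}

// Wrap: continue from the opposite side (bcd / abcd / abc).
int border_wrap(int p, int len) {
    assert(len > 0);
    return ((p % len) + len) % len;
}

// Reflect-101: mirror around the edge pixel without repeating it (dcb / abcd / cba).
int border_reflect101(int p, int len) {
    assert(len > 1 && p > -len && p < 2 * len - 1);
    if (p < 0) p = -p;                        // mirror around index 0
    if (p >= len) p = 2 * (len - 1) - p;      // mirror around index len - 1
    return p;
}
```

Applied to the row `abcd`, index -1 becomes `b` under reflect-101, `a` under replicate, and `d` under wrap, matching the table.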

Choosing the anchor

Different anchor positions give different convolution results. Prefer odd-sized kernels so that the anchor can sit at the kernel center.

1-D Gaussian: $f(x)=e^{-\frac{x^2}{2\delta^2}}$

2-D Gaussian: $f(x,y)=e^{-\frac{x^2+y^2}{2\delta^2}}$

Gaussian weights concentrate on the center pixel, so a Gaussian blur preserves more of the original image than a uniform (mean) kernel.

For Gaussian blur, the anchor must be the kernel center.

Implementation

    GaussianBlur(src, dst, Size(5, 5), 0);
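To see what the Gaussian weights look like, the 1-D formula can be sampled and normalized as in the sketch below. The name `gaussian_kernel_1d` is hypothetical, the standard deviation is passed explicitly as `sigma` (the $\delta$ of the formula), and the code does not reproduce how `GaussianBlur` derives a sigma when 0 is passed.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Sample exp(-x^2 / (2 * sigma^2)) at integer offsets around the center
// and normalize so the weights sum to 1.
std::vector<double> gaussian_kernel_1d(int ksize, double sigma) {
    assert(ksize > 0 && ksize % 2 == 1 && sigma > 0.0);
    std::vector<double> kernel(ksize);
    const int radius = ksize / 2;             // the anchor sits at the center
    double sum = 0.0;
    for (int i = 0; i < ksize; ++i) {
        const double x = static_cast<double>(i - radius);
        kernel[i] = std::exp(-(x * x) / (2.0 * sigma * sigma));
        sum += kernel[i];
    }
    for (double& w : kernel) w /= sum;        // normalization keeps overall brightness unchanged
    return kernel;
}
```

The result is symmetric and peaks at the center. A 2-D Gaussian kernel is the outer product of two such 1-D kernels, which is why the blur can be applied as a row pass followed by a column pass.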

Box blur is the mean blur. Unlike Gaussian blur, it damages edges more and more as the kernel grows.

$$K=\alpha\begin{bmatrix} 1 & 1\\1 &1\end{bmatrix}$$

$$\alpha=\begin{cases}\frac{1}{width\cdot height} & \text{when normalize=true} \\1 & \text{otherwise}\end{cases}$$

    boxFilter(src, dst, -1, Size(5, 5), Point(-1, -1), true, BORDER_DEFAULT);
    boxFilter(src, dst, -1, Size(15, 1), Point(-1, -1), true, BORDER_DEFAULT);   // horizontal blur only

$$g(x,y)=f(x,y)-[f(x+1,y)+f(x-1,y)+f(x,y+1)+f(x,y-1)-4f(x,y)]$$

$$\begin{bmatrix}0 & -1 & 0\\-1 & 5 & -1\\0 & -1 & 0\end{bmatrix}$$

Subtracting the second-order gradient (Laplacian) edge response from the original image adds the edge enhancement back onto the image, which produces the sharpening effect.

    Mat mylaplacian = (Mat_<float>(3, 3) << 0, -1, 0, -1, 5, -1, 0, -1, 0);
    Mat dst;
    filter2D(src, dst, CV_32F, mylaplacian, Point(-1, -1), 0, BORDER_DEFAULT);
    convertScaleAbs(dst, dst);
    imshow("sharpen filter", dst);

Unsharp mask (USM) filter

The second-order Laplacian is very sensitive to noise, while blurring turns strong edges into weaker ones. Subtracting one from the other makes the edge differences stand out.

$$sharp\_image=w_1\cdot blur-w_2\cdot laplacian$$

USM sharpens large edges better, and the result looks more natural.

    Mat meanBlur, laplac, dst;
    GaussianBlur(src, meanBlur, Size(3, 3), 0);
    Laplacian(src, laplac, -1, 1, 1.0, 0, BORDER_DEFAULT);     // ksize = 1, scale = 1.0, delta = 0
    addWeighted(meanBlur, 1.0, laplac, -0.7, 0, dst);

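Causes of noise: the capture device, the environment, and so on.

Types of noise: salt-and-pepper noise (black and white dots), Gaussian noise, and others.

Adding salt-and-pepper noise:

    RNG rng(12345);
    Mat saltImg = src.clone();
    int h = src.rows, w = src.cols, nums = 100000;
    for (int i = 0; i < nums; i++) {
        int x = rng.uniform(0, w), y = rng.uniform(0, h);
        if (i % 2 == 1) saltImg.at<Vec3b>(y, x) = Vec3b(255, 255, 255);   // salt
        else            saltImg.at<Vec3b>(y, x) = Vec3b(0, 0, 0);         // pepper
    }

Adding Gaussian noise:

    Mat noise = Mat::zeros(src.size(), src.type());
    randn(noise, Scalar(15, 15, 15), Scalar(30, 30, 30));     // mean 15, standard deviation 30 per channel
    Mat GaussImg;
    add(src, noise, GaussImg);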

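Principle:

Removing salt-and-pepper noise is mainly a matter of handling outliers so that their deviation is reduced. Common approaches are the median and the mean. The mean disturbs the original image, whereas the median performs better.

For Gaussian noise, median filtering makes the result blurrier and Gaussian filtering does not work well either. Edge-preserving filters tend to work better.

The filters above are linear, whereas the median filter is an order-statistic filter. Other common ones are the minimum filter and the maximum filter (the mean filter, by contrast, is linear).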
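Why the median handles outliers better than the mean shows up already on a 1-D signal with a single impulse. The sketch below uses hypothetical helper names (`median3`, `mean3`) and a window of 3 with replicated edges; it illustrates the principle and is not how `medianBlur` is implemented.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// 1-D median filter, window of 3, edges replicated.
std::vector<int> median3(const std::vector<int>& s) {
    assert(!s.empty());
    const int n = static_cast<int>(s.size());
    std::vector<int> out(n);
    for (int i = 0; i < n; ++i) {
        int w[3] = { s[std::max(i - 1, 0)], s[i], s[std::min(i + 1, n - 1)] };
        std::sort(w, w + 3);
        out[i] = w[1];                        // middle value: an isolated outlier never survives
    }
    return out;
}

// 1-D mean filter with the same window, for comparison.
std::vector<int> mean3(const std::vector<int>& s) {
    assert(!s.empty());
    const int n = static_cast<int>(s.size());
    std::vector<int> out(n);
    for (int i = 0; i < n; ++i) {
        out[i] = (s[std::max(i - 1, 0)] + s[i] + s[std::min(i + 1, n - 1)]) / 3;
    }
    return out;
}
```

On the signal `{10, 10, 255, 10, 10}`, the median restores a flat signal, while the mean spreads the impulse over three samples.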

    GaussianBlur(src, dst, Size(5, 5), 0);

Neither median filtering nor Gaussian filtering takes into account how much the center pixel should contribute. The anchor pixel actually contributes the most, which is why median-filtered images end up blurred. Gaussian filtering also ignores the case where the center pixel differs strongly from its neighbors, which usually signals an edge or gradient; there the weights of the dissimilar neighbors should be reduced.

The bilateral filter builds a different weight template for every position from its neighborhood. In short, with I the input image, W the weight, and O the output:

$$O(s_0)=\frac{1}{K}\sum_{s\in N}{W(s,s_0)\cdot I(s)}$$

The weights are generated by Gaussian kernels and combine a spatial term and a color term: $w=w_s\cdot w_c$

Spatial space:

$$w_s=\exp\left(\frac{-(s-s_0)^2}{2\delta_s^2}\right)$$

Color range:

$$w_c=\exp\left(\frac{-(I(s)-I(s_0))^2}{2\delta_c^2}\right)$$

    bilateralFilter(src, dst, 0, 100, 10);    // d = 0 (derived from sigmaSpace), sigmaColor = 100, sigmaSpace = 10

Gaussian bilateral blur removes noise from images well and is often used for skin smoothing in photos.

Similar pixel patches get large weights and dissimilar ones get small weights. The search window is l*l; a 3*3 patch around pixel i serves as the template, and a weighted mean is computed over the window. The more similar a patch, the larger its weight; the less similar, the smaller. Finally the weights are normalized.

$$w_{(p,q_1)},\;w_{(p,q_2)},\;w_{(p,q_3)}$$

$$x_{NLM}(i)=\frac{1}{\sum_{j\in W_i}{w(i,j)}}\sum_{j\in W_i}{w(i,j)x(j)}$$

$$w(i,j)=\exp\left(-\sum_{k}{\frac{[x(l^k_j)-x(l^k_i)]^2}{h^2}}\right)$$

    fastNlMeansDenoisingColored(src, dst, 15, 15, 7, 21);
    cvtColor(src, gray, COLOR_BGR2GRAY);
    fastNlMeansDenoising(gray, dst2);

This method is very slow, but it handles images with Gaussian noise very well.

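Grayscale and binary images are both single-channel, but a grayscale image takes values from 0 to 255, whereas a binary image contains only 0 and 255.

    Mat gray, binary;
    cvtColor(src, gray, COLOR_BGR2GRAY);
    imshow("gray", gray);
    threshold(gray, binary, 127, 255, THRESH_BINARY);     // pixels above 127 become 255, the rest 0
    imshow("threshold", binary);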

The most important step in binarizing an image is computing the threshold. The hand-picked threshold used above is not accurate.

Global threshold segmentation finds the threshold automatically; the simplest choice is the image mean:

$$m=\frac{\sum_{i=0}^{h-1}\sum_{j=0}^{w-1}{p(i,j)}}{w\cdot h}$$

$$p'(i,j)=\begin{cases} 255 & p(i,j)\ge m \\0 & p(i,j)<m\end{cases}$$

    cvtColor(src, gray, COLOR_BGR2GRAY);
    Scalar m = mean(gray);
    threshold(gray, binary, m[0], 255, THRESH_BINARY);

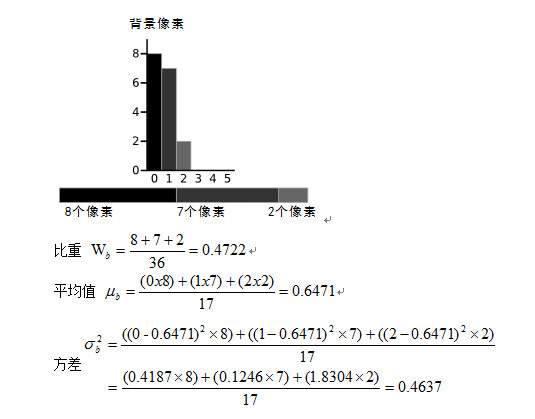

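The mean does not truly reflect how the pixel values of an image are distributed; using the histogram overcomes this weakness.

Otsu's method looks for a split point such that the spread within each class is small and the spread between the classes is large, so that the two classes can be separated.

Split the histogram into 2 classes, foreground and background, at a candidate value (here 2).

Compute the weight, mean, and variance of the foreground and of the background:

$$\sigma^2_w=w_b\cdot\sigma_b^2+w_f\cdot\sigma_f^2$$

Repeat with every other candidate split and pick the one with the smallest within-class variance as the threshold.

Implementation

    double t = threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);   // returns the threshold Otsu selected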
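The search described above can be sketched on a plain histogram. The function name `otsu_threshold` is hypothetical. The sketch maximizes the between-class variance $w_b w_f (\mu_b-\mu_f)^2$, which is equivalent to minimizing the within-class variance $\sigma_w^2$ because the two always add up to the fixed total variance; it is an illustration and not the code behind `THRESH_OTSU`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Return the level t that best separates {levels <= t} from {levels > t}.
int otsu_threshold(const std::vector<long>& hist) {
    assert(!hist.empty());
    long total = 0;
    double sum_all = 0.0;
    for (std::size_t i = 0; i < hist.size(); ++i) {
        total += hist[i];
        sum_all += static_cast<double>(i) * static_cast<double>(hist[i]);
    }
    assert(total > 0);

    long wb = 0;                 // pixel count of the background class (levels <= t)
    double sum_b = 0.0;          // sum of gray levels in the background class
    double best = -1.0;
    int best_t = 0;
    for (std::size_t t = 0; t < hist.size(); ++t) {
        wb += hist[t];
        sum_b += static_cast<double>(t) * static_cast<double>(hist[t]);
        if (wb == 0) continue;               // background still empty
        const long wf = total - wb;
        if (wf == 0) break;                  // foreground empty from here on
        const double mb = sum_b / static_cast<double>(wb);
        const double mf = (sum_all - sum_b) / static_cast<double>(wf);
        const double between = static_cast<double>(wb) * static_cast<double>(wf) * (mb - mf) * (mb - mf);
        if (between > best) { best = between; best_t = static_cast<int>(t); }
    }
    return best_t;
}
```

For a two-peaked histogram such as `{10, 10, 0, 0, 0, 0, 10, 10}`, the selected threshold falls in the empty gap between the peaks.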

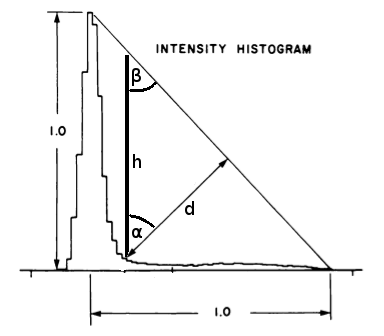

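Assume the histogram has a single-peak shape. Drawing a helper line from the peak to the end of the histogram forms a triangle. Find the bin h with the largest distance d to that line, with the angles $\alpha$ and $\beta$ both 45°.

A threshold T that lies close to the peak usually does not work well, so T is typically multiplied by a factor of 1.2 to obtain the final threshold.

$$h^2=d^2+d^2 \;\Rightarrow\; d=\sqrt{\frac{h^2}{2}} \;\Rightarrow\; d=\sin(0.7854)\cdot h$$

    double t = threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_TRIANGLE);

Drawback: it works well only for single-peak histograms. It is often used for threshold segmentation of medical images, whose histograms tend to have a single peak.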

adaptiveThreshold(gray,binary,255 ,ADAPTIVE_THRESH_MEAN_C,THRESH_BINARY,25 ,10 );

adaptiveThreshold(gray,binary,255 ,ADAPTIVE_THRESH_GAUSSIAN_C,THRESH_BINARY,25 ,10 );

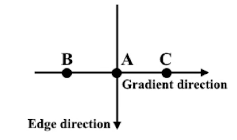

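Edge normal: the unit vector in the direction along which pixel intensity changes the most

Edge direction: perpendicular to the edge normal

Edge position or center: where the edge lies in the image

Edge strength: the local contrast along the normal; the larger it is, the more likely the pixel is an edge

Types of edges

Step edges

Roof (ridge) edges

Extraction methods

Gaussian blur

Gradient-based (all rely on a threshold)

Roberts operator

Sobel operator

Prewitt operator

Canny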

Roberts operator:

$$G_x=\begin{bmatrix}1 & 0 \\ 0 & -1\end{bmatrix}$$

$$G_y=\begin{bmatrix}0 & 1\\-1 & 0\end{bmatrix}$$

$$|G|=|G_x|+|G_y|$$

$$|G|=\sqrt{G_x^2+G_y^2}$$

The Roberts operator detects fairly pronounced edges; use it when the edges in the image are sharp.

    Mat roberts_x = (Mat_<float>(2, 2) << 1, 0, 0, -1);
    Mat roberts_y = (Mat_<float>(2, 2) << 0, 1, -1, 0);
    Mat grad_x, grad_y;
    filter2D(src, grad_x, CV_32F, roberts_x, Point(-1, -1), 0, BORDER_DEFAULT);
    filter2D(src, grad_y, CV_32F, roberts_y, Point(-1, -1), 0, BORDER_DEFAULT);
    convertScaleAbs(grad_x, grad_x);
    convertScaleAbs(grad_y, grad_y);
    Mat dst;
    add(grad_x, grad_y, dst);                 // |G| = |Gx| + |Gy|

Prewitt operator:

$$G_x=\begin{bmatrix}-1 & 0 & 1\\-1 & 0 & 1\\-1 & 0 & 1\end{bmatrix}$$

$$G_y=\begin{bmatrix}1 & 1 & 1\\0 & 0 & 0\\-1 & -1 & -1\end{bmatrix}$$

Sobel operator:

$$G_x=\begin{bmatrix}-1 & 0 & 1\\-2 & 0 & 2\\-1 & 0 & 1\end{bmatrix}$$

$$G_y=\begin{bmatrix}-1 & -2 & -1\\0 & 0 & 0\\1 & 2 & 1\end{bmatrix}$$

The Sobel operator describes edges somewhat better than the Roberts operator.

    Mat grad_x, grad_y;
    Sobel(src, grad_x, CV_32F, 1, 0);         // derivative in x
    Sobel(src, grad_y, CV_32F, 0, 1);         // derivative in y
    convertScaleAbs(grad_x, grad_x);
    convertScaleAbs(grad_y, grad_y);
    Mat dst;
    addWeighted(grad_x, 0.5, grad_y, 0.5, 0, dst);

Scharr operator:

$$G_x=\begin{bmatrix}-3 & 0 & 3\\-10 & 0 & 10\\-3 & 0 & 3\end{bmatrix}$$

$$G_y=\begin{bmatrix}-3 & -10 & -3\\0 & 0 & 0\\3 & 10 & 3\end{bmatrix}$$

The Scharr operator responds very strongly to image edges; use it when edges are hard to pick up.

    Mat grad_x, grad_y;
    Scharr(src, grad_x, CV_32F, 1, 0);
    Scharr(src, grad_y, CV_32F, 0, 1);
    convertScaleAbs(grad_x, grad_x);
    convertScaleAbs(grad_y, grad_y);
    Mat dst;
    addWeighted(grad_x, 0.5, grad_y, 0.5, 0, dst);

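Canny combines several basic algorithms to produce very good edge detection results.

It uses a high and a low threshold, which reduces the broken edges that a single threshold causes.

Blur to remove noise

Extract gradient magnitude and direction

Use the Sobel operator to compute the x and y derivatives and obtain the L2 gradient

Non-maximum suppression

Along the gradient direction found above, compare each pixel with its two neighbors in that direction; keep it if it is the largest, otherwise discard it

Linking with high and low thresholds

Also called hysteresis thresholding. With a high threshold T1 and a low threshold T2, T1/T2 = 2~3

Strategy: keep everything above the high threshold, discard everything below the low threshold, and keep pixels in between only if they connect to a pixel above the high threshold

    int t1 = 50;
    Mat src;                                   // loaded in main() before the trackbar is created

    void cannyChange(int, void*) {
        Mat edges;
        Canny(src, edges, t1, t1 * 3, 3, false);   // low threshold t1, high threshold 3 * t1
        imshow("edge", edges);
    }

    // In main(), after loading src:
    imshow("input", src);
    createTrackbar("threshold value:", "input", &t1, 100, cannyChange);
    cannyChange(0, 0);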

Laplacian kernels:

$$\begin{bmatrix}0 & 1 & 0\\1 & -4 & 1\\0 & 1 & 0\end{bmatrix}$$

$$\begin{bmatrix}1 & 1 & 1\\1 & -8 & 1\\1 & 1 & 1\end{bmatrix}$$

$$\begin{bmatrix}-1 & 2 & -1\\2 & -4 & 2\\-1 & 2 & -1\end{bmatrix}$$

Drawback: very sensitive to fine detail with large local variation and to noise.

    Laplacian(src, dst, -1, 3, 1.0, 0, BORDER_DEFAULT);    // ksize = 3, scale = 1.0, delta = 0

For a pixel, if the gradient is large in both of the mutually perpendicular x and y directions, the point is a corner.

Mathematical description:

$$M=\begin{bmatrix} \sum_{S(p)}{(\frac{dI}{dx})^2} &\sum_{S(p)}{\frac{dI}{dx}\frac{dI}{dy}} \\ \sum_{S(p)}{\frac{dI}{dx}\frac{dI}{dy}} &\sum_{S(p)}{(\frac{dI}{dy})^2}\end{bmatrix}$$

Compute the partial derivatives in the x and y directions (Ixx, Ixy, Iyx, Iyy) and find the largest gradient response.

Intensity change for a shift (u, v):

$$E(u,v)=\sum_{x,y}{w(x,y)[I(x+u,y+v)-I(x,y)]^2}$$

where $w(x,y)$ is the window function and $I(x+u,y+v)-I(x,y)$ is the intensity difference caused by the shift.

$$E(u,v)\cong[u,v]\;M\;\begin{bmatrix}u\\v\end{bmatrix}$$

$$M=\sum_{x,y}{w(x,y)\begin{bmatrix}I_x^2 &I_xI_y \\I_xI_y &I_y^2\end{bmatrix}}$$

The larger R is, the stronger the corner response. The matrix can be analyzed through two decompositions: eigen decomposition and singular value decomposition.

$$R=\det{M}-k(trace\;M)^2$$

$$\det{M}=\lambda_1\lambda_2$$

$$trace\;M=\lambda_1+\lambda_2$$

$\lambda_1$ and $\lambda_2$ are the eigenvalues of M.

In edge regions, one of $\lambda_1$, $\lambda_2$ is much larger than the other; in flat regions both are small; only in corner regions, where both are large, does R become large.

k adjusts the magnitude of R; typically k = 0.04~0.06.
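The response formula can be tried on hand-picked matrix entries. In the sketch below, `harris_response` is a hypothetical name, and `a`, `b`, `c` stand for the windowed sums of $I_x^2$, $I_xI_y$, and $I_y^2$; the inputs are illustrative numbers, not values computed from an image.

```cpp
#include <cassert>
#include <cmath>

// Harris response R = det(M) - k * trace(M)^2 for the symmetric matrix
// M = [a b; b c], where a = sum Ix^2, b = sum Ix*Iy, c = sum Iy^2.
double harris_response(double a, double b, double c, double k = 0.04) {
    assert(k > 0.0);
    const double det = a * c - b * b;         // equals lambda1 * lambda2
    const double trace = a + c;               // equals lambda1 + lambda2
    return det - k * trace * trace;
}
```

With `a = c = 10, b = 0` (two large eigenvalues) R is clearly positive, while `a = 10, c = 0, b = 0` (one large eigenvalue, an edge) gives a negative R, which is how thresholding R separates corners from edges.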

    int blocksize = 2, ksize = 3;
    double k = 0.04;
    Mat dst;
    cornerHarris(gray, dst, blocksize, ksize, k);          // floating-point response map
    Mat dst_norm = Mat::zeros(dst.size(), dst.type());
    normalize(dst, dst_norm, 0, 255, NORM_MINMAX, -1, Mat());
    convertScaleAbs(dst_norm, dst_norm);                   // convert to 8-bit
    for (int row = 0; row < src.rows; row++) {
        for (int col = 0; col < src.cols; col++) {
            int rsp = dst_norm.at<uchar>(row, col);
            if (rsp > 150) {                               // strong response: mark as a corner
                circle(src, Point(col, row), 5, Scalar(0, 255, 0), 2, 8, 0);
            }
        }
    }

Shi-Tomasi simplifies how R is computed: it uses only the smaller of $\lambda_1$ and $\lambda_2$. If the smaller eigenvalue exceeds the threshold, then both eigenvalues are large.

$$R=\min(\lambda_1,\lambda_2)$$
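For a symmetric 2x2 matrix the smaller eigenvalue has a closed form, so the Shi-Tomasi score can be sketched directly. The name `min_eigenvalue` is hypothetical, and `a`, `b`, `c` again denote the windowed sums of $I_x^2$, $I_xI_y$, and $I_y^2$.

```cpp
#include <cassert>
#include <cmath>

// Smaller eigenvalue of the symmetric matrix [a b; b c]:
// lambda_min = (a + c) / 2 - sqrt(((a - c) / 2)^2 + b^2)
double min_eigenvalue(double a, double b, double c) {
    const double half_trace = 0.5 * (a + c);
    const double half_diff = 0.5 * (a - c);
    return half_trace - std::sqrt(half_diff * half_diff + b * b);
}
```

For `[2 1; 1 2]` the eigenvalues are 1 and 3, so the score is 1; for an edge-like matrix such as `[10 0; 0 0]` the score is 0 and the point is rejected.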

    vector<Point2f> corners;
    double quality_level = 0.01;
    goodFeaturesToTrack(gray, corners, 200, quality_level, 3, Mat(), 3, false);   // at most 200 corners, min distance 3
    for (size_t i = 0; i < corners.size(); i++) {
        circle(src, corners[i], 5, Scalar(0, 255, 0), 2, 8, 0);
    }

Connected-component labeling:

    RNG rng(12345);
    GaussianBlur(src, src, Size(3, 3), 0);
    Mat gray, binary;
    cvtColor(src, gray, COLOR_BGR2GRAY);
    threshold(gray, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);
    Mat label = Mat::zeros(binary.size(), CV_32S);
    int num_label = connectedComponents(binary, label, 8, CV_32S, CCL_DEFAULT);
    vector<Vec3b> colorTable(num_label);
    colorTable[0] = Vec3b(0, 0, 0);                        // label 0 is the background
    for (int i = 1; i < num_label; i++) {
        colorTable[i] = Vec3b(rng.uniform(0, 256), rng.uniform(0, 256), rng.uniform(0, 256));
    }
    Mat dst = Mat::zeros(src.size(), CV_8UC3);
    int h = dst.rows, w = dst.cols;
    for (int row = 0; row < h; row++) {
        for (int col = 0; col < w; col++) {
            int label_index = label.at<int>(row, col);
            dst.at<Vec3b>(row, col) = colorTable[label_index];
        }
    }
    putText(dst, format("number:%d", num_label), Point(50, 50), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 0), 1);

Connected components with statistics:

    Mat stats, centroids;
    int num_label = connectedComponentsWithStats(binary, label, stats, centroids, 8, CV_32S, CCL_DEFAULT);
    for (int i = 1; i < num_label; i++) {                  // skip the background label 0
        int cx = (int)centroids.at<double>(i, 0);
        int cy = (int)centroids.at<double>(i, 1);
        int x = stats.at<int>(i, CC_STAT_LEFT);
        int y = stats.at<int>(i, CC_STAT_TOP);
        int w = stats.at<int>(i, CC_STAT_WIDTH);
        int h = stats.at<int>(i, CC_STAT_HEIGHT);
        int area = stats.at<int>(i, CC_STAT_AREA);
        circle(dst, Point(cx, cy), 3, Scalar(255, 0, 0), 2, 8, 0);
        Rect box(x, y, w, h);
        rectangle(dst, box, Scalar(0, 255, 0), 2, 8);
        putText(dst, format("area:%d", area), Point(x, y), FONT_HERSHEY_PLAIN, 1.0, Scalar(0, 255, 0), 1);
    }

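| Function | Description |
| --- | --- |
| `findContours(binary, contours, hierarchy, mode, method)` | Finds contours and their tree hierarchy, from the outermost boundary inward |
| `drawContours(src, contours, index, color, thickness, lineType)` | Draws contours |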

    vector<vector<Point>> contours;
    vector<Vec4i> hierarchy;
    findContours(binary, contours, hierarchy, RETR_TREE, CHAIN_APPROX_SIMPLE, Point());
    drawContours(src, contours, -1, Scalar(0, 0, 255), 2, 8);  // -1 draws every contour
    imshow("contour", src);

$$\iint\limits_{D}\left(\frac{\partial Q}{\partial x}-\frac{\partial P}{\partial y}\right)dx\,dy=\oint_{\partial D}P\,dx+Q\,dy$$

$$A=\sum^{n}_{k=0}{\frac{(x_{k+1}+x_k)(y_{k+1}-y_k)}{2}}$$
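The discrete area sum above can be checked by hand on a small polygon. The sketch below is plain Python rather than OpenCV's `contourArea`; the function name `polygon_area` and the test rectangle are assumptions made for illustration.

```python
def polygon_area(points):
    """Area of a closed polygon from A = sum((x_{k+1} + x_k) * (y_{k+1} - y_k)) / 2."""
    total = 0.0
    n = len(points)
    for k in range(n):
        x_k, y_k = points[k]
        x_next, y_next = points[(k + 1) % n]  # wrap around to close the contour
        total += (x_next + x_k) * (y_next - y_k)
    return abs(total) / 2.0  # the sign depends on orientation, so take the magnitude

rectangle = [(0, 0), (4, 0), (4, 3), (0, 3)]
print(polygon_area(rectangle))  # 4 x 3 rectangle
```

The absolute value is a design choice: clockwise and counter-clockwise contours give sums of opposite sign but the same area.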

    for (size_t t = 0; t < contours.size(); t++) {
        double area = contourArea(contours[t]);
        double len = arcLength(contours[t], true);
        if (area < 20 || len < 10) continue;  // skip tiny contours
        Rect box = boundingRect(contours[t]);
        rectangle(src, box, Scalar(0, 0, 255), 2, 8, 0);
        RotatedRect rrt = minAreaRect(contours[t]);
        ellipse(src, rrt, Scalar(255, 0, 0), 2, 8);
        Point2f pts[4];
        rrt.points(pts);
        for (int i = 0; i < 4; i++) {
            line(src, pts[i], pts[(i + 1) % 4], Scalar(0, 255, 0), 2, 8);
        }
        printf("angle:%.2f\n", rrt.angle);
        drawContours(src, contours, static_cast<int>(t), Scalar(0, 0, 255), -1, 8);
    }

First-order moments: $p+q=1$

Second-order moments: $p+q=2$

n-th-order moments: $p+q=n$

$$m_{p,q}=\sum_{x=0}^{M-1}\sum_{y=0}^{N-1}{f(x,y)x^py^q}$$

The input $f(x,y)$ is a binary image, so it takes only the values 0 and 1.

The centroid follows from $m_{1,0}$, $m_{0,1}$ and $m_{0,0}$:

$$x_{avg}=\frac{m_{1,0}}{m_{0,0}},\qquad y_{avg}=\frac{m_{0,1}}{m_{0,0}}$$
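As a small worked instance of the raw-moment and centroid formulas, the sketch below evaluates them on a tiny binary image in plain Python. The helper `raw_moment` and the sample image are hypothetical, and x is taken as the column index and y as the row index.

```python
def raw_moment(image, p, q):
    """m_{p,q} = sum over pixels of f(x, y) * x^p * y^q (x = column, y = row)."""
    return sum(value * (x ** p) * (y ** q)
               for y, row in enumerate(image)
               for x, value in enumerate(row))

# Hypothetical binary image containing a 2x2 block of ones
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
m00 = raw_moment(image, 0, 0)          # number of foreground pixels
x_avg = raw_moment(image, 1, 0) / m00  # centroid column
y_avg = raw_moment(image, 0, 1) / m00  # centroid row
print(m00, x_avg, y_avg)
```

For a binary image $m_{0,0}$ is simply the foreground pixel count, which is why dividing by it yields the mean position.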

Central moments are defined as:

$$\mu_{p,q}=\sum_{x=0}^{M-1}\sum_{y=0}^{N-1}{f(x,y)(x-x_{avg})^p(y-y_{avg})^q}$$

Normalized central moments:

$$\eta_{p,q}=\frac{\mu_{p,q}}{m_{00}^{\frac{p+q}{2}+1}}$$

Hu moments:

$$\phi_1 = \eta_{20}+ \eta_{02}$$

$$\phi_2 = (\eta_{20}- \eta_{02})^2+4\eta_{11}^2$$

$$\phi_3 = (\eta_{30}- 3\eta_{12})^2+(3\eta_{21}- \eta_{03})^2$$

$$\phi_4 = (\eta_{30}+\eta_{12})^2+(\eta_{21}+ \eta_{03})^2$$

$$\phi_5 = (\eta_{30}-3\eta_{12})(\eta_{30}+\eta_{12})[(\eta_{30}+\eta_{12})^2-3(\eta_{21}+\eta_{03})^2] +(3\eta_{21}-\eta_{03})(\eta_{21}+\eta_{03})[3(\eta_{30}+\eta_{12})^2-(\eta_{21}+\eta_{03})^2]$$

$$\phi_6 = (\eta_{20}-\eta_{02})[(\eta_{30}+\eta_{12})^2-(\eta_{21}+\eta_{03})^2] +4\eta_{11}(\eta_{30}+\eta_{12})(\eta_{21}+\eta_{03})$$

$$\phi_7 = (3\eta_{21}-\eta_{03})(\eta_{30}+\eta_{12})[(\eta_{30}+\eta_{12})^2-3(\eta_{21}+\eta_{03})^2] -(\eta_{30}-3\eta_{12})(\eta_{21}+\eta_{03})[3(\eta_{30}+\eta_{12})^2-(\eta_{21}+\eta_{03})^2]$$

Shape matching compares log-scaled Hu moments $h_i$ of the two shapes A and B:

$$m_i^A=sign(h_i^A)\cdot\log{\lvert h_i^A\rvert}$$

$$m_i^B=sign(h_i^B)\cdot\log{\lvert h_i^B\rvert}$$
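The value of these invariants is easiest to see numerically. The following sketch, in plain Python with hypothetical helper names (`central_moment`, `eta`, `hu_first_two`), computes $\phi_1$ and $\phi_2$ for a small shape and for the same shape translated, and the two results agree. It is an illustration of the formulas only, not how `HuMoments` is implemented in OpenCV.

```python
def central_moment(image, p, q):
    """mu_{p,q} about the centroid (x = column, y = row)."""
    m00 = sum(v for row in image for v in row)
    x_avg = sum(v * x for row in image for x, v in enumerate(row)) / m00
    y_avg = sum(v * y for y, row in enumerate(image) for v in row) / m00
    return sum(v * (x - x_avg) ** p * (y - y_avg) ** q
               for y, row in enumerate(image) for x, v in enumerate(row))

def eta(image, p, q):
    """Normalized central moment mu_{p,q} / m00^((p+q)/2 + 1)."""
    m00 = sum(v for row in image for v in row)
    return central_moment(image, p, q) / m00 ** ((p + q) / 2 + 1)

def hu_first_two(image):
    e20, e02, e11 = eta(image, 2, 0), eta(image, 0, 2), eta(image, 1, 1)
    return e20 + e02, (e20 - e02) ** 2 + 4 * e11 ** 2

# Hypothetical L-shaped blob and the same blob shifted by (2, 1)
shape = [[0, 0, 0, 0, 0, 0],
         [0, 1, 1, 1, 0, 0],
         [0, 1, 0, 0, 0, 0],
         [0, 0, 0, 0, 0, 0]]
shifted = [[0, 0, 0, 0, 0, 0],
           [0, 0, 0, 0, 0, 0],
           [0, 0, 0, 1, 1, 1],
           [0, 0, 0, 1, 0, 0]]
print(hu_first_two(shape), hu_first_two(shifted))
```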

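    // Compare every contour in contours1 against the first contour in contours2.
    // Assumes both vectors have already been filled by findContours.
    vector<vector<Point>> contours1, contours2;
    Moments mm2 = moments(contours2[0]);
    Mat hu2;
    HuMoments(mm2, hu2);
    for (size_t t = 0; t < contours1.size(); t++) {
        Moments mm = moments(contours1[t]);
        Mat hu;
        HuMoments(mm, hu);
        // matchShapes takes the contours themselves and derives the Hu moments internally
        double dist = matchShapes(contours1[t], contours2[0], CONTOURS_MATCH_I1, 0);
        if (dist < 1) {
            drawContours(src1, contours1, static_cast<int>(t), Scalar(0, 0, 255), 2, 8);
        }
        // centroid from the raw moments
        double cx = mm.m10 / mm.m00;
        double cy = mm.m01 / mm.m00;
        circle(src1, Point(cvRound(cx), cvRound(cy)), 3, Scalar(255, 0, 0), 2, 8, 0);
    }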

    // Polygon approximation; each row of `res` is one vertex
    for (size_t t = 0; t < contours.size(); t++) {
        Mat res;
        approxPolyDP(contours[t], res, 4, true);
        printf("corners:%d,columns:%d\n", res.rows, res.cols);
    }

    // Ellipse fitting
    for (size_t t = 0; t < contours.size(); t++) {
        if (contours[t].size() < 5) continue;  // fitEllipse needs at least 5 points
        RotatedRect rrt = fitEllipse(contours[t]);
        float w = rrt.size.width, h = rrt.size.height;
        Point center = rrt.center;
        circle(src, center, 3, Scalar(255, 0, 0), 2, 8, 0);
        ellipse(src, rrt, Scalar(0, 255, 0), 2, 8);
    }

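In Cartesian coordinates a line is determined by two parameters, the slope k and the intercept b: $y=\left(-\frac{\cos{\theta}}{\sin{\theta}}\right)x+\frac{r}{\sin{\theta}}$

In polar form it is determined by the radius r and the angle θ: $r=x\cos{\theta}+y\sin{\theta}$

Hough line detection relies on the polar form. For each edge point, try a range of θ values and compute the corresponding r, which traces a curve in (θ, r) space. If the edge points lie on one line, their curves all pass through a common point, and that point gives the equation of the line.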
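The voting idea can be sketched in a few lines of plain Python. This is only an illustration of the accumulator, not the `HoughLines` implementation; the function name `hough_votes`, the 1-degree angle step, and the sample points are assumptions.

```python
import math

def hough_votes(points, theta_steps=180):
    """Vote in (theta index, r) space using r = x*cos(theta) + y*sin(theta)."""
    votes = {}
    for x, y in points:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps
            r = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(t, r)] = votes.get((t, r), 0) + 1
    return votes

# Hypothetical edge points on the horizontal line y = 5, plus one outlier
points = [(x, 5) for x in range(10)] + [(3, 9)]
votes = hough_votes(points)
peak = max(votes.values())
print(peak, votes[(90, 5)])  # the bin theta = 90 degrees, r = 5 collects all ten line points
```

Because r is rounded to whole pixels, neighboring angle bins can tie with the true one on short lines, which is one reason real detectors apply a vote threshold and non-maximum suppression.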

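These functions operate on binary images.

| Function | Description |
| --- | --- |
| `HoughLines` | Hough line transform; returns a vector of polar parameters (ρ, θ) |
| `HoughLinesP` | Hough line transform; returns all candidate line segments and lines |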

    // Standard Hough transform; with Vec3f each line is (rho, theta, votes)
    vector<Vec3f> lines;
    HoughLines(binary, lines, 1, CV_PI / 180, 100, 0, 0);
    Point pt1, pt2;
    for (size_t i = 0; i < lines.size(); i++) {
        float rho = lines[i][0];
        float theta = lines[i][1];
        float acc = lines[i][2];  // accumulator votes (not used below)
        double a = cos(theta);
        double b = sin(theta);
        double x0 = a * rho, y0 = b * rho;
        // extend 1000 px in both directions along the line
        pt1.x = cvRound(x0 + 1000 * (-b));
        pt1.y = cvRound(y0 + 1000 * (a));
        pt2.x = cvRound(x0 - 1000 * (-b));
        pt2.y = cvRound(y0 - 1000 * (a));
        if (rho > 0) {
            line(src, pt1, pt2, Scalar(0, 0, 255), 2, 8, 0);
        } else {
            line(src, pt1, pt2, Scalar(0, 255, 255), 2, 8, 0);
        }
    }

    // Probabilistic Hough transform; each result is a segment (x1, y1, x2, y2)
    Mat src = imread("1.jpg"), binary;
    Canny(src, binary, 80, 160, 3, false);
    vector<Vec4i> lines;
    HoughLinesP(binary, lines, 1, CV_PI / 180, 100, 30, 10);
    Mat dst = Mat::zeros(src.size(), src.type());
    for (size_t i = 0; i < lines.size(); i++) {
        line(dst, Point(lines[i][0], lines[i][1]), Point(lines[i][2], lines[i][3]), Scalar(0, 0, 255), 2, 8);
    }
    imshow("hough line p", dst);

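In Cartesian coordinates a circle is determined by three parameters $(x_0,y_0,r)$.

Parametric form:

$$x=x_0+r\cos{\theta}$$

$$y=y_0+r\sin{\theta}$$

Hough circle detection works as follows: take three points on the same circle and draw a circle of radius r around each of them. If the three circles meet in a single point, the three original points lie on one circle and the intersection is its center.

Hough circle detection does not scan every possible radius; the radius range has to be specified.

Candidate centers are found from the gradient, which reduces the number of unknown parameters.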
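For a single known radius, the center voting described above can be sketched in plain Python. This is a simplified illustration and not the gradient-based method used by `HoughCircles`; the function name `vote_circle_centers` and the four sample points are assumptions.

```python
import math

def vote_circle_centers(edge_points, radius, angle_steps=360):
    """Each edge point votes for every center that would put it on a circle of this radius."""
    votes = {}
    for x, y in edge_points:
        candidates = set()
        for step in range(angle_steps):
            theta = 2 * math.pi * step / angle_steps
            # invert x = x0 + r*cos(theta), y = y0 + r*sin(theta)
            candidates.add((round(x - radius * math.cos(theta)),
                            round(y - radius * math.sin(theta))))
        for cell in candidates:  # one vote per point per cell
            votes[cell] = votes.get(cell, 0) + 1
    return votes

# Hypothetical edge points on a circle of radius 10 centered at (20, 15)
edge_points = [(30, 15), (20, 25), (10, 15), (20, 5)]
votes = vote_circle_centers(edge_points, radius=10)
center = max(votes, key=votes.get)
print(center, votes[center])
```

Scanning many radii this way needs a three-dimensional accumulator, which is why the radius range is restricted in practice.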

    Mat src = imread("1.jpg"), gray;
    cvtColor(src, gray, COLOR_BGR2GRAY);
    GaussianBlur(gray, gray, Size(9, 9), 2, 2);  // denoise before the transform
    vector<Vec3f> circles;  // each entry is (x, y, radius)
    int minDist = 10;
    double minRadius = 20, maxRadius = 100;
    HoughCircles(gray, circles, HOUGH_GRADIENT, 2, minDist, 100, 100, minRadius, maxRadius);
    for (size_t t = 0; t < circles.size(); t++) {
        Point center(cvRound(circles[t][0]), cvRound(circles[t][1]));
        int radius = cvRound(circles[t][2]);
        circle(src, center, radius, Scalar(0, 0, 255), 2, 8, 0);  // outline
        circle(src, center, 2, Scalar(0, 255, 255), 2, 8, 0);     // center
    }
    imshow("input", src);

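Hough detection is very sensitive to noise, so blur the image with a Gaussian filter first whenever possible. Many of its parameters are empirical and need repeated tuning.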

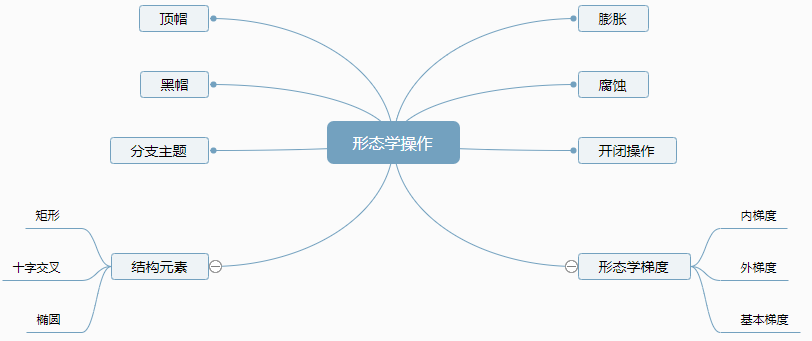

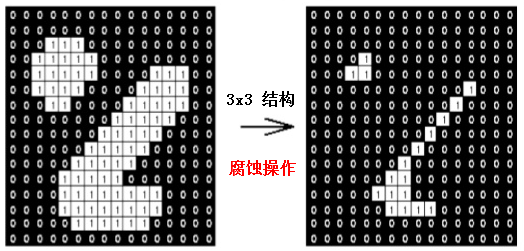

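Morphology is a separate branch of image processing.

It is an important tool for processing grayscale and binary images.

It grew out of set theory and related areas of mathematics.

It plays an important role in machine vision, OCR, and similar fields.

Line: horizontal, vertical

Rectangle: w·h

Cross: 4-neighborhood, 8-neighborhood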

    Mat kernel = getStructuringElement(MORPH_RECT, Size(5, 5), Point(-1, -1));
    erode(binary, binary, kernel);  // erosion on a binary image
    erode(src, src, kernel);        // erosion on the color image
    dilate(src, src, kernel);       // dilation

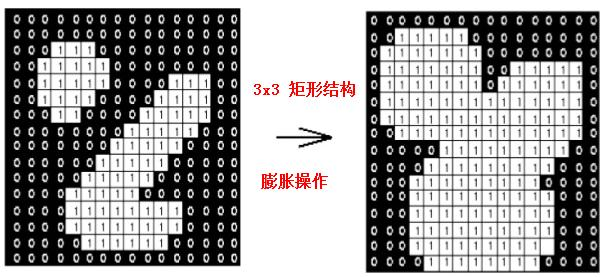

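Opening = erosion followed by dilation

Purpose: removes small noise blobs; it can be used, for example, to clean interference out of CAPTCHA images.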
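To see why opening removes small blobs, the sketch below implements binary erosion and dilation with a square structuring element in plain Python. The helper names and the sample image are assumptions; the border is padded so that erosion does not eat into objects touching the edge.

```python
def _morph(image, k, reduce_fn, pad):
    """Apply min (erosion) or max (dilation) over each k x k neighborhood."""
    h, w, r = len(image), len(image[0]), k // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [image[j][i] if 0 <= j < h and 0 <= i < w else pad
                      for j in range(y - r, y + r + 1)
                      for i in range(x - r, x + r + 1)]
            row.append(reduce_fn(window))
        out.append(row)
    return out

def erode(image, k=3):
    return _morph(image, k, min, pad=1)

def dilate(image, k=3):
    return _morph(image, k, max, pad=0)

def opening(image, k=3):
    return dilate(erode(image, k), k)

# Hypothetical binary image: a 3x3 object plus one isolated noise pixel
image = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
opened = opening(image)
for row in opened:
    print(row)
```

Erosion deletes the single noise pixel and shrinks the object to its center; dilation then grows the object back, but the noise pixel has nothing left to grow from.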

    Mat kernel = getStructuringElement(MORPH_RECT, Size(5, 5), Point(-1, -1));
    morphologyEx(binary, dst, MORPH_OPEN, kernel, Point(-1, -1), 1);

MORPH_OPEN

开操作

MORPH_CLOSE

闭操作

MORPH_GRADIENT

基本梯度

MORPH_TOPHAT

顶帽操作

MORPH_BLACKHAT

黑帽操作

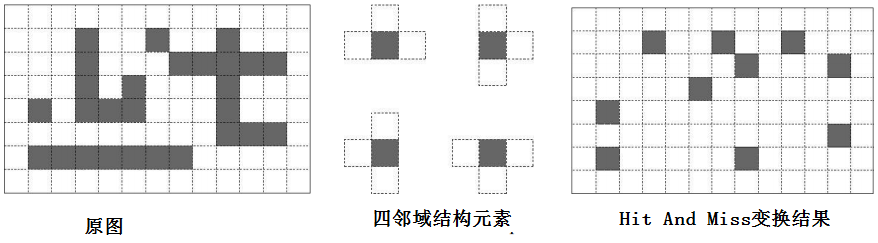

MORPH_HITMISS

击中击不中

结构元素是矩形时,闭操作之后的空洞呈方形。可以改变结构元素枚举类型:MORPH_CIRCLE

交友元素的size设置为(15,1)可以只提取横线。

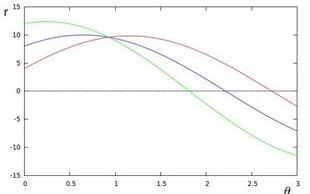

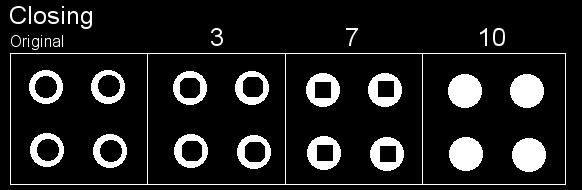

Basic gradient: dilation minus erosion

Internal gradient: original image minus erosion

External gradient: dilation minus original image
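The three gradients are easy to verify on a one-dimensional signal. The sketch below uses plain Python with hypothetical helpers `erode_1d` and `dilate_1d` (a window of 3 samples) and a made-up row of pixel values.

```python
def erode_1d(signal, k=3):
    r = k // 2
    return [min(signal[max(0, i - r): i + r + 1]) for i in range(len(signal))]

def dilate_1d(signal, k=3):
    r = k // 2
    return [max(signal[max(0, i - r): i + r + 1]) for i in range(len(signal))]

signal = [0, 0, 9, 9, 9, 0, 0]  # hypothetical row with one bright object
eroded, dilated = erode_1d(signal), dilate_1d(signal)
basic = [d - e for d, e in zip(dilated, eroded)]     # dilation - erosion
internal = [s - e for s, e in zip(signal, eroded)]   # original - erosion
external = [d - s for d, s in zip(dilated, signal)]  # dilation - original
print(basic, internal, external)
```

The internal gradient marks the object's own boundary pixels, the external gradient marks the pixels just outside it, and the basic gradient is their sum.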

    // Assumes `gray` is a grayscale image and `binary` is declared
    Mat kernel = getStructuringElement(MORPH_RECT, Size(5, 5), Point(-1, -1));
    Mat basic_grad, inter_grad, exter_grad;
    morphologyEx(gray, basic_grad, MORPH_GRADIENT, kernel, Point(-1, -1), 1);  // dilation - erosion
    Mat dst1, dst2;
    erode(gray, dst1, kernel);
    subtract(gray, dst1, inter_grad);   // original - erosion
    dilate(gray, dst2, kernel);
    subtract(dst2, gray, exter_grad);   // dilation - original
    threshold(basic_grad, binary, 0, 255, THRESH_BINARY | THRESH_OTSU);

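Top hat: the original image minus its opening

Black hat: the closing minus the original image

Top hat and black hat are used to extract small but useful blobs of information from an image.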

    // Top hat
    Mat kernel = getStructuringElement(MORPH_RECT, Size(5, 5), Point(-1, -1));
    morphologyEx(binary, dst, MORPH_TOPHAT, kernel, Point(-1, -1), 1);

    // Hit-or-miss with a cross-shaped structuring element
    Mat cross_kernel = getStructuringElement(MORPH_CROSS, Size(5, 5), Point(-1, -1));
    morphologyEx(binary, dst, MORPH_HITMISS, cross_kernel, Point(-1, -1), 1);

    VideoCapture capture(0);  // default camera
    if (!capture.isOpened()) return;
    namedWindow("frame", WINDOW_AUTOSIZE);
    Mat frame;
    while (true) {
        bool ret = capture.read(frame);
        if (!ret) break;
        imshow("frame", frame);
        char c = waitKey(50);
        if (c == 27) break;  // Esc
    }

    VideoCapture capture("1.mp4");
    int fps = capture.get(CAP_PROP_FPS);
    int width = capture.get(CAP_PROP_FRAME_WIDTH);
    int height = capture.get(CAP_PROP_FRAME_HEIGHT);
    int total_frames = capture.get(CAP_PROP_FRAME_COUNT);
    int type = capture.get(CAP_PROP_FOURCC);  // codec of the source video

VideoCapture capture ("http://www.xxx.com/1.mp4" ) ;

    // Assumes `capture`, `type`, `fps`, `width` and `height` from the snippets above
    Mat frame;
    VideoWriter writer("./res.mp4", type, fps, Size(width, height), true);
    while (true) {
        bool ret = capture.read(frame);
        if (!ret) break;
        writer.write(frame);
        imshow("frame", frame);
        char c = waitKey(50);
        if (c == 27) break;  // Esc
    }

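OpenCV has limits on saved video: it is best to keep each file under 2 GB.

A good approach is to start a new file once the current one reaches a certain size.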

capture.release (); writer.release ();

    // Track the largest red region in `frame`
    Mat hsv, mask;
    cvtColor(frame, hsv, COLOR_BGR2HSV);
    inRange(hsv, Scalar(0, 43, 46), Scalar(10, 255, 255), mask);
    Mat se = getStructuringElement(MORPH_RECT, Size(15, 15));
    morphologyEx(mask, mask, MORPH_OPEN, se);
    vector<vector<Point>> contours;
    vector<Vec4i> hierarchy;
    findContours(mask, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE, Point());
    int index = -1;
    double max_area = 0;
    for (size_t t = 0; t < contours.size(); t++) {
        double area = contourArea(contours[t]);
        if (max_area < area) {
            index = static_cast<int>(t);
            max_area = area;
        }
    }
    if (index >= 0) {  // index 0 is a valid contour
        RotatedRect rrt = minAreaRect(contours[index]);
        ellipse(frame, rrt, Scalar(255, 0, 0), 2, 8);
        circle(frame, rrt.center, 4, Scalar(0, 255, 0), 2, 8, 0);
    }
    imshow("detection", frame);

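Video differs from still images in that it is continuous: each frame has context from its neighbors. Making good use of earlier frames allows the trend of future frames to be modeled.

Common methods

KNN

GMM

Fuzzy Integral

KNN and GMM segment foreground and background at the pixel level (each pixel is either background or foreground), while the fuzzy integral gives the probability that a pixel belongs to the background or the foreground.

GMM is based on Gaussians and maximum likelihood. It computes the Mahalanobis distance and checks whether it falls within a given range to decide whether the current pixel is foreground. If the distance is larger, a new weighted Gaussian is added, so the model gains one component; once the model is full, the oldest component is discarded. In this way the model is updated and maintained dynamically.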

    auto pMOG2 = createBackgroundSubtractorMOG2(500, 16, false);
    Mat mask, bg_image;
    pMOG2->apply(frame, mask);            // foreground mask for the current frame
    pMOG2->getBackgroundImage(bg_image);  // current background estimate

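Optical flow can be seen as the change in the structured light of an image, or as the apparent motion of its brightness pattern.

Optical flow methods are divided into sparse optical flow (KLT) and dense optical flow.

The analysis is based on adjacent video frames.

Optical flow is sensitive to lighting changes, so the frames usually need to be blurred first.

Assumptions: 1. brightness constancy; 2. small motion; 3. spatial coherence.

Brightness constancy: if the pixel at (x, y) moves by (u, v), the two pixel values should be equal.

$$I(x,y,t)=I(x+u,y+v,t+1)$$

Spatial coherence: assume a window of 5×5 pixels that all move by the same (u, v). By brightness constancy, every pixel $p_i$ in the window satisfies

$$0=I_t(p_i)+\nabla I(p_i)\cdot\begin{bmatrix}u &v\end{bmatrix}$$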

Using spatial coherence, the motion (u, v) can be found by solving for d with least squares.

$$Ad=b$$

$$(A^TA)d=A^Tb$$

$$A=\begin{bmatrix}I_x(p_1) &I_y(p_1) \\... &... \\I_x(p_{25}) &I_y(p_{25}) \end{bmatrix}$$

$$b=-\begin{bmatrix}I_t(p_1) \\... \\ I_t(p_{25}) \end{bmatrix}$$

$$d=\begin{bmatrix}u \\ v \end{bmatrix}$$

$$M=A^TA=\begin{bmatrix}\sum{I_xI_x} &\sum{I_xI_y} \\\sum{I_xI_y} &\sum{I_yI_y} \end{bmatrix}$$

$$A^Tb=-\begin{bmatrix}\sum{I_xI_t} \\ \sum{I_yI_t} \end{bmatrix}$$

M is the same matrix that appears in Harris corner detection, so only corner points satisfy the spatial coherence condition well enough for the system to be solved.

Corner detection is therefore used to obtain the points whose displacement is measured.
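The normal equations above form a 2×2 system that can be solved directly. The sketch below does so in plain Python for a handful of made-up gradient samples; `lucas_kanade` and the input values are illustrative assumptions, not the `calcOpticalFlowPyrLK` implementation (which also uses image pyramids and iteration).

```python
def lucas_kanade(ix, iy, it):
    """Solve (A^T A) d = A^T b for d = (u, v); rows of A are (Ix, Iy) and b = -It."""
    sxx = sum(x * x for x in ix)
    sxy = sum(x * y for x, y in zip(ix, iy))
    syy = sum(y * y for y in iy)
    sxt = sum(x * t for x, t in zip(ix, it))
    syt = sum(y * t for y, t in zip(iy, it))
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("A^T A is singular: the window is not corner-like")
    # d = M^{-1} * (-[sxt, syt]) with M = [[sxx, sxy], [sxy, syy]]
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v

# Hypothetical gradients for four pixels, generated from a true motion of (0.5, -0.25)
ix = [1.0, 0.0, 1.0, 2.0]
iy = [0.0, 1.0, 1.0, 1.0]
it = [-(gx * 0.5 + gy * -0.25) for gx, gy in zip(ix, iy)]
print(lucas_kanade(ix, iy, it))
```

The singular case is the aperture problem: on a flat region or a straight edge the determinant of M vanishes, which is why tracking starts from corners.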

    // KLT sparse optical flow; assumes `old_gray` holds the previous frame, `gray` the current
    // frame, and `frame` the color image to draw on
    Mat gray, old_gray;
    vector<Point2f> corners;
    double quality_level = 0.01;
    int minDistance = 5;
    goodFeaturesToTrack(gray, corners, 200, quality_level, minDistance, Mat(), 3, false);
    vector<Point2f> pts[2];
    pts[0].insert(pts[0].end(), corners.begin(), corners.end());
    vector<uchar> status;
    vector<float> err;
    TermCriteria criteria = TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 10, 0.01);
    while (true) {
        // read the next frame into `frame` and convert it to `gray` here
        calcOpticalFlowPyrLK(old_gray, gray, pts[0], pts[1], status, err, Size(21, 21), 3, criteria, 0);
        size_t i = 0, k = 0;
        for (i = 0; i < pts[1].size(); i++) {
            if (status[i]) {  // keep only points that were tracked successfully
                pts[0][k] = pts[0][i];
                pts[1][k++] = pts[1][i];
                circle(frame, pts[1][i], 2, Scalar(0, 0, 255), 2, 8, 0);
                line(frame, pts[0][i], pts[1][i], Scalar(0, 255, 255), 2, 8, 0);
            }
        }
        pts[0].resize(k);
        pts[1].resize(k);
        imshow("KLT", frame);
        std::swap(pts[1], pts[0]);
        cv::swap(old_gray, gray);
    }

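The code above only checks whether each point is valid, so it displays a large number of points. The version below adds a constraint on the distance moved, which improves the tracking.

With the distance check some corners are discarded, so the points must be re-initialized before the next detection can continue.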

    // KLT with a minimum-displacement filter; same assumptions as above, plus a global `RNG rng`
    Mat gray, old_gray;
    vector<Point2f> corners;
    double quality_level = 0.01;
    int minDistance = 5;
    goodFeaturesToTrack(gray, corners, 200, quality_level, minDistance, Mat(), 3, false);
    vector<Point2f> pts[2];
    vector<Point2f> initPoints;  // where each track started
    pts[0].insert(pts[0].end(), corners.begin(), corners.end());
    initPoints.insert(initPoints.end(), corners.begin(), corners.end());
    vector<uchar> status;
    vector<float> err;
    TermCriteria criteria = TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 10, 0.01);
    while (true) {
        // read the next frame into `frame` and convert it to `gray` here
        calcOpticalFlowPyrLK(old_gray, gray, pts[0], pts[1], status, err, Size(21, 21), 3, criteria, 0);
        size_t i = 0, k = 0;
        for (i = 0; i < pts[1].size(); i++) {
            double dist = abs(pts[0][i].x - pts[1][i].x) + abs(pts[0][i].y - pts[1][i].y);
            if (status[i] && dist > 2) {  // tracked and moved far enough
                initPoints[k] = initPoints[i];
                pts[0][k] = pts[0][i];
                pts[1][k++] = pts[1][i];
                circle(frame, pts[1][i], 2, Scalar(0, 0, 255), 2, 8, 0);
                line(frame, pts[0][i], pts[1][i], Scalar(0, 255, 255), 2, 8, 0);
            }
        }
        pts[0].resize(k);
        pts[1].resize(k);
        initPoints.resize(k);
        // draw each track from its starting point in a random color
        vector<Scalar> lut;
        for (size_t t = 0; t < initPoints.size(); t++) {
            int b = rng.uniform(0, 255), g = rng.uniform(0, 255), r = rng.uniform(0, 255);
            lut.push_back(Scalar(b, g, r));
        }
        for (size_t t = 0; t < initPoints.size(); t++) {
            line(frame, initPoints[t], pts[1][t], lut[t], 2, 8, 0);
        }
        imshow("KLT", frame);
        std::swap(pts[1], pts[0]);
        cv::swap(old_gray, gray);
        if (pts[0].size() < 40) {  // too few points left: detect corners again
            goodFeaturesToTrack(gray, corners, 200, quality_level, minDistance, Mat(), 3, false);
            pts[0].insert(pts[0].end(), corners.begin(), corners.end());
            initPoints.insert(initPoints.end(), corners.begin(), corners.end());
        }
    }

Each pixel is correlated with the pixels around it.

The optical flow estimate is based on quadratic polynomials.

A displacement is computed for every pixel.

The estimate is based on the total displacement and the mean.

Mathematically, x is a 2×1 vector representing the coordinates (x, y).

$$f_1(x)=x^TA_1x+b_1^Tx+c_1$$

$$f_2(x)=f_1(x-d)=(x-d)^TA_1(x-d)+b_1^T(x-d)+c_1=x^TA_2x+b^T_2x+c_2$$

$$A_2=A_1$$

$$b_2=b_1-2A_1d$$

$$c_2=d^TA_1d-b^T_1d+c_1$$

The coefficient A is unchanged, while the changes in b and c depend on d. Solving for d therefore becomes a matter of solving for the coefficients.

Assume that all pixels in an ROI window share the same coefficients. This gives a system of constraint equations, which is fitted with least squares; the resulting coefficients yield d, the overall displacement. From the solved d the coefficients can be re-estimated, and the loop is iterated until the coefficients are accurate enough. The result is the optical flow field.

To display the dense flow field, the vectors are converted from Cartesian to polar coordinates and shown in the HSV color space.
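The relation $b_2=b_1-2A_1d$ can be inverted to recover the displacement once the polynomial coefficients of both frames are known. The sketch below does this for one 2×2 system in plain Python; the function name `displacement` and the sample coefficients are assumptions, and the real Farneback algorithm additionally averages over neighborhoods and iterates.

```python
def displacement(a1, b1, b2):
    """Solve b2 = b1 - 2*A1*d for d, where A1 is a 2x2 matrix given as nested tuples."""
    (a, b), (c, e) = a1
    det = a * e - b * c
    if abs(det) < 1e-12:
        raise ValueError("A1 is singular")
    rx, ry = b1[0] - b2[0], b1[1] - b2[1]  # b1 - b2 = 2*A1*d
    dx = (e * rx - b * ry) / (2 * det)
    dy = (-c * rx + a * ry) / (2 * det)
    return dx, dy

# Hypothetical coefficients: b2 was generated from d = (1.5, -1.0)
a1 = ((2.0, 0.0), (0.0, 1.0))
b1 = (1.0, 1.0)
b2 = (1.0 - 2 * 2.0 * 1.5, 1.0 - 2 * 1.0 * -1.0)
print(displacement(a1, b1, b2))
```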

    // Dense (Farneback) optical flow shown in HSV; assumes an opened VideoCapture `capture`
    Mat frame, preFrame, gray, preGray;
    capture.read(preFrame);
    cvtColor(preFrame, preGray, COLOR_BGR2GRAY);
    Mat hsv = Mat::zeros(preFrame.size(), preFrame.type());
    Mat mag = Mat::zeros(hsv.size(), CV_32FC1);
    Mat ang = Mat::zeros(hsv.size(), CV_32FC1);
    Mat xpts = Mat::zeros(hsv.size(), CV_32FC1);
    Mat ypts = Mat::zeros(hsv.size(), CV_32FC1);
    Mat_<Point2f> flow;
    vector<Mat> mv;
    split(hsv, mv);
    Mat dst;
    while (capture.read(frame)) {
        cvtColor(frame, gray, COLOR_BGR2GRAY);
        calcOpticalFlowFarneback(preGray, gray, flow, 0.5, 3, 15, 3, 5, 1.2, 0);
        for (int row = 0; row < flow.rows; row++) {
            for (int col = 0; col < flow.cols; col++) {
                const Point2f &flow_xy = flow.at<Point2f>(row, col);
                xpts.at<float>(row, col) = flow_xy.x;
                ypts.at<float>(row, col) = flow_xy.y;
            }
        }
        cartToPolar(xpts, ypts, mag, ang);
        ang = ang * 180 / CV_PI / 2.0;  // radians to OpenCV hue range 0..180
        normalize(mag, mag, 0, 255, NORM_MINMAX);
        convertScaleAbs(mag, mag);
        convertScaleAbs(ang, ang);
        mv[0] = ang;          // hue = direction
        mv[1] = Scalar(255);  // full saturation
        mv[2] = mag;          // value = magnitude
        merge(mv, hsv);
        cvtColor(hsv, dst, COLOR_HSV2BGR);
        imshow("dst", dst);
        imshow("frame", frame);
        gray.copyTo(preGray);  // the current frame becomes the previous one
        if (waitKey(1) == 27) break;
    }

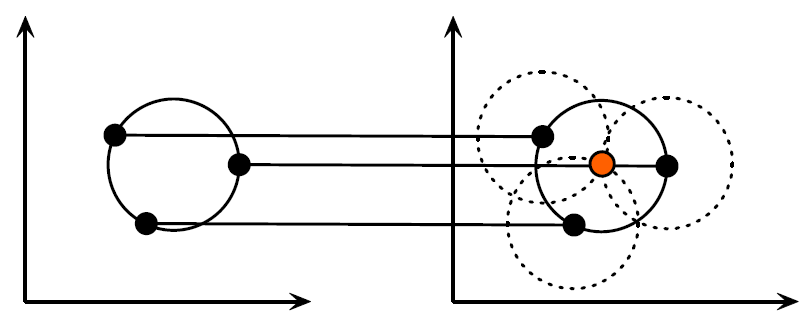

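Draw a circle at a random position in the space and compute the mean $(\bar{x},\bar{y})$ of the points inside it; then move the circle's center to that mean and repeat until it stops moving.

Building on mean shift, CAMShift refits an ellipse on every iteration so that the window size adapts automatically.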
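The mean shift iteration itself is short. Here is a minimal sketch in plain Python on a set of 2D points; the function name `mean_shift`, the radius, and the sample points are assumptions, and OpenCV's `meanShift` works on a back-projection image with a rectangular window rather than on a point list.

```python
def mean_shift(points, start, radius, max_iter=50, eps=1e-6):
    """Move a circular window to the mean of the points inside it until it stops moving."""
    cx, cy = start
    for _ in range(max_iter):
        inside = [(px, py) for px, py in points
                  if (px - cx) ** 2 + (py - cy) ** 2 <= radius ** 2]
        if not inside:
            break  # empty window: nothing to move toward
        nx = sum(px for px, py in inside) / len(inside)
        ny = sum(py for px, py in inside) / len(inside)
        moved = ((nx - cx) ** 2 + (ny - cy) ** 2) ** 0.5
        cx, cy = nx, ny
        if moved < eps:
            break
    return cx, cy

# Hypothetical points: a cluster around (10, 10) and one far-away point
points = [(9, 9), (9, 11), (11, 9), (11, 11), (10, 10), (0, 0)]
print(mean_shift(points, start=(8, 8), radius=3))
```

Starting from (8, 8), the window first covers only two cluster points, moves to their mean, and on the next step covers the whole cluster.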

    // Mean shift tracking on the hue back-projection; assumes an opened VideoCapture `capture`
    Mat frame, hsv, hue, mask, hist, backproj;
    capture.read(frame);
    int hsize = 16;
    float hranges[] = {0, 180};
    Rect trackWindow;
    bool init = true;
    const float* ranges = hranges;
    Rect selection = selectROI("window name", frame, true, false);
    while (true) {
        if (!capture.read(frame)) break;
        cvtColor(frame, hsv, COLOR_BGR2HSV);
        inRange(hsv, Scalar(26, 43, 46), Scalar(34, 255, 255), mask);
        int ch[] = {0, 0};
        hue.create(hsv.size(), hsv.depth());
        mixChannels(&hsv, 1, &hue, 1, ch, 1);  // copy the H channel
        if (init) {
            init = false;
            Mat roi(hue, selection), maskroi(mask, selection);
            calcHist(&roi, 1, 0, maskroi, hist, 1, &hsize, &ranges);
            normalize(hist, hist, 0, 255, NORM_MINMAX);
            trackWindow = selection;
        }
        calcBackProject(&hue, 1, 0, hist, backproj, &ranges);
        backproj &= mask;
        // The original call was truncated after TermCriteria::COUNT|;
        // the EPS flag and the values 10 and 1 below are assumed.
        meanShift(backproj, trackWindow, TermCriteria(TermCriteria::COUNT | TermCriteria::EPS, 10, 1));
        rectangle(frame, trackWindow, Scalar(0, 0, 255), 3, LINE_AA);
        imshow("meanshift", frame);
        if (waitKey(1) == 27) break;
    }

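| Function | Description |
| --- | --- |
| `bitwise_and()` | |
| `bitwise_not()` | |
| `bitwise_or()` | |
| `bitwise_xor()` | |
| `compare()` | |
| `divide()` | Division |
| `exp()` | |
| `log()` | |
| `max()` | |
| `min()` | |
| `multiply()` | |
| `threshold()` | Binarization; the threshold must be given explicitly |
| `blendLinear()` | Linear blend of two images |
| `calcHist()` | |
| `createBoxFilter()` | Creates a normalized 2D box filter |
| `canny` | Edge detection |
| `createGaussianFilter()` | Creates a Gaussian filter |
| `createLaplacianFilter()` | Creates a Laplacian filter |
| `createLinearFilter()` | Creates a linear filter |
| `createMorphologyFilter()` | Morphological filter: erosion, opening, closing, and so on |
| `createSobelFilter()` | Creates a Sobel filter |
| `createHoughCirclesDetector()` | Creates a Hough circle detector |
| `createMedianFilter()` | Creates a median filter |
| `createTemplateMatching()` | Template matching |
| `cvtColor()` | Color space conversion |
| `dft()` | Forward or inverse discrete Fourier transform of a floating-point matrix |
| `drawColorDisp()` | Colors a disparity image |
| `equalizeHist()` | Equalizes the histogram of a grayscale image |
| `findMinMax()` | |
| `findMinMaxLoc()` | |
| `flip()` | Flips a 2D matrix |
| `merge()` | Builds a multi-channel matrix from several single-channel matrices |
| `split()` | Splits a multi-channel matrix into single-channel matrices |
| `getCudaEnabledDeviceCount()` | Returns the number of available GPUs |
| `getDevice()` | Returns the current device index, as set by `cuda::setDevice` or initialized by default |
| `printCudaDeviceInfo()` | |
| `resetDevice()` | Explicitly destroys and cleans up all resources associated with the current device in the current process |
| `setDevice()` | Sets a device and initializes it for the current thread; if this call is omitted, the default device is initialized on the first CUDA use |
| `remap()` | Applies a generic geometric transformation to an image |
| `resize()` | Resizes an image |
| `rotate()` | Rotates an image around the origin (0,0) and then shifts it |
| `sum()` | Returns the sum of the matrix elements |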

Mat img = imread(R"(1.jpg)" ),result; GpuMat image; GpuMat dst; image.upload(img); cuda::resize(image,dst,Size(0 ,0 ),2 ,2 ,INTER_CUBIC); dst.download(result); int cx = image.cols/2 ;int cy = image.rows/2 ;Mat M = getRotationMatrix2D(Point(cx,cy),45 ,1. ); cuda::warpAffine(image,dst,M,image.size()); dst.download(result);

    // CUDA filters; assumes the cv and cv::cuda namespaces are in scope
    Mat img = imread("1.jpg");
    GpuMat image, dres3x3;
    image.upload(img);
    cuda::cvtColor(image, image, COLOR_BGR2BGRA);

    // box filter
    auto filter3x3_box = cuda::createBoxFilter(image.type(), image.type(), Size(3, 3), Point(-1, -1));
    filter3x3_box->apply(image, dres3x3);

    // Gaussian filter (created but not applied in the original)
    auto filter_gaussian = cuda::createGaussianFilter(image.type(), image.type(), Size(5, 5), 5);

    // Sobel gradients
    auto sobel_x_filter = cuda::createSobelFilter(image.type(), image.type(), 1, 0, 3);
    auto sobel_y_filter = cuda::createSobelFilter(image.type(), image.type(), 0, 1, 3);
    GpuMat grad_x, grad_y, grad_xy;
    sobel_x_filter->apply(image, grad_x);
    sobel_y_filter->apply(image, grad_y);
    cuda::addWeighted(grad_x, 0.5, grad_y, 0.5, 0, grad_xy);

    // Scharr gradients; createScharrFilter has no kernel-size argument
    auto scharr_x_filter = cuda::createScharrFilter(image.type(), image.type(), 1, 0);
    auto scharr_y_filter = cuda::createScharrFilter(image.type(), image.type(), 0, 1);
    GpuMat sgrad_x, sgrad_y, sgrad_xy;
    scharr_x_filter->apply(image, sgrad_x);
    scharr_y_filter->apply(image, sgrad_y);
    cuda::addWeighted(sgrad_x, 0.5, sgrad_y, 0.5, 0, sgrad_xy);

    // Canny edges
    GpuMat gray, edges;
    cuda::cvtColor(image, gray, COLOR_BGRA2GRAY);
    auto edge_detector = cuda::createCannyEdgeDetector(50, 150, 3, true);
    edge_detector->detect(gray, edges);

    // Laplacian; the factory takes (srcType, dstType, ksize)
    GpuMat lap_edges;
    auto laplacian_filter = cuda::createLaplacianFilter(gray.type(), gray.type(), 3);
    laplacian_filter->apply(gray, lap_edges);

    Mat res3;
    dres3x3.download(res3);

Mat image_host = getImage(), dst_host; imshow("input" , image_host); int64 start = getTickCount(); GpuMat image, dst; image.upload(image_host); cuda::bilateralFilter(image, dst, 0 , 100 , 14 , 4 ); cout << "gpu filter fps:" << getTickFrequency() / (getTickCount() - start) << endl ;dst.download(dst_host); imshow("gray" , dst_host); waitKey(0 ); destroyAllWindows();

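In the OpenCV 4 CUDA module, binarization and morphology are not available in the same form as on the CPU; the example below uses `cuda::threshold` with a fixed threshold and `cuda::createMorphologyFilter`.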

    Mat gray_host = getImage(0), dst_host;  // getImage is the author's helper, not shown here
    GpuMat gray, binary;
    gray.upload(gray_host);
    cuda::threshold(gray, binary, 0, 255, THRESH_BINARY);
    Mat se = cv::getStructuringElement(MORPH_RECT, Size(3, 3));
    auto morph_filter = cuda::createMorphologyFilter(MORPH_OPEN, gray.type(), se);
    morph_filter->apply(binary, binary);
    binary.download(dst_host);
    imshow("input", gray_host);
    imshow("gray", dst_host);
    waitKey(0);
    destroyAllWindows();

Mat image_host = imread("D:/images/building.png" ); imshow("input" , image_host); GpuMat src, gray, corners; Mat dst; src.upload(image_host); cuda::cvtColor(src, gray, COLOR_BGR2GRAY); auto corner_detector = cuda::createGoodFeaturesToTrackDetector(gray.type(), 1000 , 0.01 , 15 , 3 , true );corner_detector->detect(gray, corners); corners.download(dst); printf ("number of corners : %d \n" , corners.cols);for (int i = 0 ; i < corners.cols; i++) { int r = rng.uniform(0 , 255 ); int g = rng.uniform(0 , 255 ); int b = rng.uniform(0 , 255 ); Point2f pt = dst.at<Point2f>(0 , i); circle(image_host, pt, 3 , Scalar(b, g, r), 2 , 8 , 0 ); } imshow("corner detect result" , image_host); waitKey(0 );

    void background_demo() {
        VideoCapture cap;
        cap.open("D:/images/video/vtest.avi");
        auto mog = cuda::createBackgroundSubtractorMOG2();
        Mat frame;
        GpuMat d_frame, d_fgmask, d_bgimg;
        Mat fg_mask, bgimg, fgimg;
        namedWindow("input", WINDOW_AUTOSIZE);
        namedWindow("background", WINDOW_AUTOSIZE);
        namedWindow("mask", WINDOW_AUTOSIZE);
        Mat se = cv::getStructuringElement(MORPH_RECT, Size(5, 5));
        while (true) {
            int64 start = getTickCount();
            bool ret = cap.read(frame);
            if (!ret) break;
            d_frame.upload(frame);
            mog->apply(d_frame, d_fgmask);
            mog->getBackgroundImage(d_bgimg);
            // open the mask to remove small noise
            auto morph_filter = cuda::createMorphologyFilter(MORPH_OPEN, d_fgmask.type(), se);
            morph_filter->apply(d_fgmask, d_fgmask);
            d_bgimg.download(bgimg);
            d_fgmask.download(fg_mask);
            double fps = getTickFrequency() / (getTickCount() - start);
            putText(frame, format("FPS: %.2f", fps), Point(50, 50), FONT_HERSHEY_SIMPLEX, 1.0, Scalar(0, 0, 255), 2, 8);
            imshow("input", frame);
            imshow("background", bgimg);
            imshow("mask", fg_mask);
            char c = waitKey(1);
            if (c == 27) {
                break;
            }
        }
        waitKey(0);
        return;
    }

    void optical_flow_demo() {
        VideoCapture cap;
        cap.open("test.avi");
        auto farn = cuda::FarnebackOpticalFlow::create();
        Mat f, pf;
        cap.read(pf);
        GpuMat frame, gray, preFrame, preGray;
        preFrame.upload(pf);
        cuda::cvtColor(preFrame, preGray, COLOR_BGR2GRAY);
        Mat hsv = Mat::zeros(preFrame.size(), preFrame.type());
        GpuMat flow;
        vector<Mat> mv;
        split(hsv, mv);
        GpuMat gMat, gAng;
        Mat mag = Mat::zeros(hsv.size(), CV_32FC1);
        Mat ang = Mat::zeros(hsv.size(), CV_32FC1);
        gMat.upload(mag);
        gAng.upload(ang);
        namedWindow("input", WINDOW_AUTOSIZE);
        namedWindow("optical flow demo", WINDOW_AUTOSIZE);
        Mat se = cv::getStructuringElement(MORPH_RECT, Size(5, 5));
        while (true) {
            int64 start = getTickCount();
            bool ret = cap.read(f);
            if (!ret) break;
            frame.upload(f);
            cuda::cvtColor(frame, gray, COLOR_BGR2GRAY);
            farn->calc(preGray, gray, flow);
            vector<GpuMat> mm;
            cuda::split(flow, mm);  // x and y components of the flow
            cuda::cartToPolar(mm[0], mm[1], gMat, gAng);
            cuda::normalize(gMat, gMat, 0, 255, NORM_MINMAX, CV_32FC1);
            gMat.download(mag);
            gAng.download(ang);
            ang = ang * 180 / CV_PI / 2.0;  // radians to hue range 0..180
            convertScaleAbs(mag, mag);
            convertScaleAbs(ang, ang);
            mv[0] = ang;
            mv[1] = Scalar(255);
            mv[2] = mag;
            merge(mv, hsv);
            Mat bgr;
            cv::cvtColor(hsv, bgr, COLOR_HSV2BGR);
            double fps = getTickFrequency() / (getTickCount() - start);
            putText(f, format("FPS: %.2f", fps), Point(50, 50), FONT_HERSHEY_SIMPLEX, 1.0, Scalar(0, 0, 255), 2, 8);
            gray.copyTo(preGray);
            imshow("input", f);
            imshow("optical flow demo", bgr);
            char c = waitKey(1);
            if (c == 27) {
                break;
            }
        }
        waitKey(0);
        return;
    }

    // ORB feature matching on the GPU, then homography-based object localization
    Mat h_object_image = imread("D:/images/box.png", 0);
    Mat h_scene_image = imread("D:/images/box_in_scene.png", 0);
    cuda::GpuMat d_object_image;
    cuda::GpuMat d_scene_image;
    vector<KeyPoint> h_keypoints_scene, h_keypoints_object;
    cuda::GpuMat d_descriptors_scene, d_descriptors_object;
    d_object_image.upload(h_object_image);
    d_scene_image.upload(h_scene_image);
    auto orb = cuda::ORB::create();
    orb->detectAndCompute(d_object_image, cuda::GpuMat(), h_keypoints_object, d_descriptors_object);
    orb->detectAndCompute(d_scene_image, cuda::GpuMat(), h_keypoints_scene, d_descriptors_scene);
    Ptr<cuda::DescriptorMatcher> matcher = cuda::DescriptorMatcher::createBFMatcher(NORM_HAMMING);
    vector<vector<DMatch>> d_matches;
    matcher->knnMatch(d_descriptors_object, d_descriptors_scene, d_matches, 2);
    std::cout << "match size:" << d_matches.size() << endl;
    std::vector<DMatch> good_matches;
    for (size_t k = 0; k < std::min(h_keypoints_object.size() - 1, d_matches.size()); k++) {
        // ratio test; check the match count before indexing the second match
        if (d_matches[k].size() == 2 && d_matches[k][0].distance < 0.9 * d_matches[k][1].distance) {
            good_matches.push_back(d_matches[k][0]);
        }
    }
    std::cout << "size:" << good_matches.size() << endl;
    Mat h_image_result;
    drawMatches(h_object_image, h_keypoints_object, h_scene_image, h_keypoints_scene, good_matches, h_image_result, Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::DEFAULT);
    std::vector<Point2f> object;
    std::vector<Point2f> scene;
    for (size_t i = 0; i < good_matches.size(); i++) {
        object.push_back(h_keypoints_object[good_matches[i].queryIdx].pt);
        scene.push_back(h_keypoints_scene[good_matches[i].trainIdx].pt);
    }
    Mat Homo = findHomography(object, scene, RANSAC);
    std::vector<Point2f> corners(4);
    std::vector<Point2f> scene_corners(4);
    corners[0] = Point(0, 0);
    corners[1] = Point(h_object_image.cols, 0);
    corners[2] = Point(h_object_image.cols, h_object_image.rows);
    corners[3] = Point(0, h_object_image.rows);
    perspectiveTransform(corners, scene_corners, Homo);
    // the scene sits to the right of the object in the result, so shift by the object width
    Point2f offset(h_object_image.cols, 0);
    for (int i = 0; i < 4; i++) {
        line(h_image_result, scene_corners[i] + offset, scene_corners[(i + 1) % 4] + offset, Scalar(255, 0, 0), 4);
    }
    imshow("Good Matches & Object detection", h_image_result);
    waitKey(0);

VideoCapture cap; cap.open("test.avi" ); Mat f; GpuMat frame, gray; namedWindow("input" , WINDOW_AUTOSIZE); namedWindow("People Detector Demo" , WINDOW_AUTOSIZE); auto hog = cuda::HOG::create();hog->setSVMDetector(hog->getDefaultPeopleDetector()); vector <Rect> objects;while (true ) { int64 start = getTickCount(); bool ret = cap.read(f); if (!ret) break ; imshow("input" , f); frame.upload(f); cuda::cvtColor(frame, gray, COLOR_BGR2GRAY); hog->detectMultiScale(gray, objects); for (int i = 0 ; i < objects.size(); i++) { rectangle(f, objects[i], Scalar(0 , 0 , 255 ), 2 , 8 , 0 ); } double fps = getTickFrequency() / (getTickCount() - start); putText(f, format("FPS: %.2f" , fps), Point(50 , 50 ), FONT_HERSHEY_SIMPLEX, 1.0 , Scalar(0 , 0 , 255 ), 2 , 8 ); imshow("People Detector Demo" , f); char c = cv::waitKey(1 ); if (c == 27 ) { break ; } } cv::waitKey(0 );

    // Color object tracking: HSV range test on the GPU, connected components on the CPU
    VideoCapture cap;
    cap.open("video.mp4");
    Mat frame_host, binary;
    GpuMat frame, hsv, mask;
    vector<GpuMat> mv;
    vector<GpuMat> thres(4);
    while (true) {
        int64 start = getTickCount();
        bool ret = cap.read(frame_host);
        if (!ret) break;
        imshow("frame", frame_host);
        frame.upload(frame_host);
        cuda::cvtColor(frame, hsv, COLOR_BGR2HSV);
        cuda::split(hsv, mv);
        // emulate inRange(35..77, 43.., 46..) with per-channel thresholds
        cuda::threshold(mv[0], thres[0], 35, 255, THRESH_BINARY);
        cuda::threshold(mv[0], thres[3], 77, 255, THRESH_BINARY);
        cuda::threshold(mv[1], thres[1], 43, 255, THRESH_BINARY);
        cuda::threshold(mv[2], thres[2], 46, 255, THRESH_BINARY);
        cuda::bitwise_xor(thres[0], thres[3], thres[0]);
        cuda::bitwise_and(thres[1], thres[0], mask);
        cuda::bitwise_and(mask, thres[2], mask);
        cuda::threshold(mask, mask, 66, 255, THRESH_BINARY);
        Mat se = cv::getStructuringElement(MORPH_RECT, Size(7, 7));
        auto morph_filter = cuda::createMorphologyFilter(MORPH_OPEN, mask.type(), se);
        morph_filter->apply(mask, mask);
        mask.download(binary);
        imshow("mask", binary);
        Mat labels = Mat::zeros(binary.size(), CV_32S);
        Mat stats, centroids;
        int num_labels = connectedComponentsWithStats(binary, labels, stats, centroids, 8, 4);
        for (int i = 1; i < num_labels; i++) {
            int cx = centroids.at<double>(i, 0);
            int cy = centroids.at<double>(i, 1);
            int x = stats.at<int>(i, CC_STAT_LEFT);
            int y = stats.at<int>(i, CC_STAT_TOP);
            int width = stats.at<int>(i, CC_STAT_WIDTH);
            int height = stats.at<int>(i, CC_STAT_HEIGHT);
            if (width < 50 || height < 50) {
                continue;  // ignore small regions
            }
            circle(frame_host, Point(cx, cy), 2, Scalar(255, 0, 0), 2, 8, 0);
            Rect rect(x, y, width, height);
            rectangle(frame_host, rect, Scalar(0, 0, 255), 2, 8, 0);
        }
        double fps = getTickFrequency() / (getTickCount() - start);
        putText(frame_host, format("FPS: %.2f", fps), Point(50, 50), FONT_HERSHEY_SIMPLEX, 1.0, Scalar(0, 0, 255), 2, 8);
        imshow("color object tracking", frame_host);
        if (fps > 80) {
            break;
        }
        char c = waitKey(1);
        if (c == 27) {
            break;
        }
    }

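| Function | Description |
| --- | --- |
| `import cv2` | Import the module |
| `img=cv2.imread(imagename)` | Read an image |
| `cap=cv2.VideoCapture(videoname)` | Open a video |
| `cap.isOpened()` | Check whether it opened successfully |
| `ret,frame=cap.read()` | `ret`: whether the frame was read successfully; `frame`: the current frame; an empty return means the video has ended |
| `cv2.cvtColor(img,cv2.COLOR_BGR2RGB)` | Color space conversion |
| `cv2.imshow(title,img);cv2.waitKey(0);cv2.destroyAllWindows()` | Show an image |
| `k = cv2.waitKey(5) & 0xFF` | Keyboard input |
| `img_roi=img[:200,:200]` | Region of interest |
| `b,g,r=cv2.split(img)` | Split the image channels |
| `img=cv2.merge((b,g,r))` | Merge channels |
| `cv2.copyMakeBorder(img,top,bottom,left,right,borderType)` | Border padding |
| `cv2.add(img1,img2)` | Adds two images; values above 255 saturate to 255 |
| `cv2.resize(img,(w,h)[,fx,fy])` | Resize; instead of a size, the scale factors `fx` and `fy` can be given; `INTER_CUBIC` is bicubic interpolation |
| `cv2.addWeighted(img1,alpha,img2,beta,b)` | Weighted sum |
| `mask=np.zeros(img.shape[:2],np.uint8);mask[100:200,200:300]=255;cv2.bitwise_and(img,img,mask=mask)` | Mask operation |
| `img_group=np.hstack((img1,img2,img3))` | Concatenate images horizontally |
| `img_group=np.vstack((img1,img2,img3))` | Concatenate images vertically |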

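| Function | Description |
| --- | --- |
| `ret,dst=cv2.threshold(src,thresh,maxval,type)` | Image thresholding |
| `blur=cv2.blur(img,(3,3))` | Mean filter |
| `box=cv2.boxFilter(img,-1,(3,3),normalize=True)` | Box filter; without normalization the values overflow |
| `gaussian=cv2.GaussianBlur(img,(3,3),1)` | Gaussian filter |
| `median=cv2.medianBlur(img,3)` | Median filter |
| `cv2.filter2D()` | 2D filter |
| `bilateralFilter()` | Bilateral filter |
| `ximgproc.jointBilateralFilter()` | Joint bilateral filter |
| `ximgproc.guidedFilter()` | Guided filter |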

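| Function | Description |
| --- | --- |
| `erosion=cv2.erode(img,kernel=np.ones((5,5),np.uint8),iterations=1)` | Erosion; `iterations` is the number of iterations |
| `dilate=cv2.dilate(img,kernel,iterations)` | Dilation |
| `opening=cv2.morphologyEx(img,cv2.MORPH_OPEN,kernel)` | Opening: erosion followed by dilation |
| `closing=cv2.morphologyEx(img,cv2.MORPH_CLOSE,kernel)` | Closing: dilation followed by erosion |
| `gradient=cv2.morphologyEx(img,cv2.MORPH_GRADIENT,kernel)` | Gradient: dilation minus erosion |
| `tophat=cv2.morphologyEx(img,cv2.MORPH_TOPHAT,kernel)` | Top hat: original input minus opening; highlights the bright parts |
| `blackhat=cv2.morphologyEx(img,cv2.MORPH_BLACKHAT,kernel)` | Black hat: closing minus original input |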

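| Function | Description |
| --- | --- |
| `dst=cv2.Sobel(src,ddepth,dx,dy,ksize)` | Sobel operator. Gradients can be negative and are truncated in an 8-bit result, so compute in `cv2.CV_64F` and take the absolute value: `dst_x=cv2.Sobel(img,cv2.CV_64F,1,0,ksize=3);dst_x=cv2.convertScaleAbs(dst_x)` (likewise for `dst_y`), then `dst=cv2.addWeighted(dst_x,0.5,dst_y,0.5,0)`. Computing x and y separately gives better results |
| `dst=cv2.Scharr(src,ddepth,dx,dy)` | Scharr operator |
| `dst=cv2.Laplacian(src,ddepth,ksize)` | Laplacian operator |
| `laplacian = cv2.Laplacian(frame, cv2.CV_64F)` | Edge detection operator: Laplacian |
| `sobelx = cv2.Sobel(frame,cv2.CV_64F, 1, 0, ksize=5)` | Edge detection operator: Sobel (X) |
| `sobely = cv2.Sobel(frame,cv2.CV_64F, 0, 1, ksize=5)` | Edge detection operator: Sobel (Y) |
| `edges = cv2.Canny(frame, 100, 200)` | Edge detection: Canny |

    # Normalize the horizontal gradient of `tophat` to 0..255 for display
    # (assumes numpy as np, matplotlib.pyplot as plt, and an existing `tophat` image)
    gradX = cv2.Sobel(tophat, ddepth=cv2.CV_32F, dx=1, dy=0, ksize=-1)
    gradX = np.absolute(gradX)
    (minVal, maxVal) = (np.min(gradX), np.max(gradX))
    gradX = 255 * ((gradX - minVal) / (maxVal - minVal))
    gradX = gradX.astype("uint8")
    plt.imshow(gradX)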

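| Function | Description |
|---|---|
| `dst = cv2.Canny(img, minval, maxval)` | Canny edge detection; `minval` and `maxval` are the lower and upper hysteresis thresholds |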

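| Function | Description |
|---|---|
| `up = cv2.pyrUp(img); down = cv2.pyrDown(img)` | Image pyramid: `pyrUp` upsamples to twice the size, `pyrDown` downsamples to half the size |

Laplacian pyramid: `src - pyrUp(pyrDown(src))`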
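One level of a Laplacian pyramid stores exactly the detail that is lost by downsampling and upsampling again, so the original can be rebuilt from the small image plus that detail. The sketch below is NumPy only. As a loud assumption, `pyr_down` and `pyr_up` are simplified stand-ins (2x2 block averaging and pixel repetition) rather than the Gaussian-kernel versions in OpenCV; the reconstruction identity holds either way.

```python
import numpy as np

def pyr_down(img):
    """Halve each dimension by averaging 2x2 blocks (stand-in for cv2.pyrDown)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def pyr_up(img):
    """Double each dimension by pixel repetition (stand-in for cv2.pyrUp)."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)

rng = np.random.default_rng(0)
src = rng.integers(0, 256, size=(8, 8)).astype(np.float64)

small = pyr_down(src)
laplacian = src - pyr_up(small)        # src - pyrUp(pyrDown(src))
restored = pyr_up(small) + laplacian   # the level is lossless
```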

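| Function | Description |
|---|---|
| `cv2.findContours(img_binary, mode, method)` | Finds contours in a binary image; `mode` is the contour retrieval mode and `method` is the contour approximation method |
| `cv2.drawContours(img_cpy, contours, -1, (0, 0, 255), 2)` | Draws contours; `-1` draws all of them, the tuple is the color, `2` is the line width |
| `area = cv2.contourArea(contours[0])` | Contour area |
| `cv2.arcLength(contours[0], True)` | Perimeter; `True` means the contour is closed |
| `approx = cv2.approxPolyDP(contours[0], 0.1, True)` | Polygon approximation of a contour, returning its vertices; the second argument is `epsilon`, the maximum allowed deviation from the original contour |
| `x, y, w, h = cv2.boundingRect(contours[0])` | Bounding rectangle |
| `(x, y), radius = cv2.minEnclosingCircle(contours[0])` | Minimum enclosing circle |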

    import cv2

    img = cv2.imread("/data/pic/1.png")
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    ret, thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)
    # OpenCV 4.x returns (contours, hierarchy);
    # OpenCV 3.x returns (image, contours, hierarchy).
    contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)
    img_cpy = img.copy()  # drawContours draws in place, so work on a copy
    res = cv2.drawContours(img_cpy, contours, -1, (0, 0, 255), 2)
    cv2.imshow("title", res)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
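Contour area and perimeter are computed from the contour's vertex list, not by counting pixels. A minimal NumPy-only sketch of the two quantities for a polygon, using the shoelace (Green's) formula; `polygon_area` and `polygon_perimeter` are hypothetical names, and the square below is made-up test data.

```python
import numpy as np

def polygon_area(points):
    """Shoelace formula over an (N, 2) array of (x, y) vertices."""
    x, y = points[:, 0], points[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

def polygon_perimeter(points, closed=True):
    """Sum of segment lengths; `closed` adds the last-to-first segment."""
    diffs = np.diff(points, axis=0)
    length = np.sqrt((diffs ** 2).sum(axis=1)).sum()
    if closed:
        length += np.linalg.norm(points[0] - points[-1])
    return float(length)

square = np.array([[0, 0], [10, 0], [10, 10], [0, 10]], dtype=np.float64)
area = polygon_area(square)
perimeter = polygon_perimeter(square, closed=True)
open_length = polygon_perimeter(square, closed=False)
```

The `closed` flag plays the same role as the `True` argument of `cv2.arcLength` in the table.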

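| Method | Description |
|---|---|
| `TM_SQDIFF` | Squared difference; the smaller the value, the better the match |
| `TM_SQDIFF_NORMED` | Normalized squared difference |
| `TM_CCORR` | Cross-correlation; the larger the value, the better the match |
| `TM_CCORR_NORMED` | Normalized cross-correlation |
| `TM_CCOEFF` | Correlation coefficient; the larger the value, the better the match |
| `TM_CCOEFF_NORMED` | Normalized correlation coefficient |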

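| Function | Description |
|---|---|
| `res = cv2.matchTemplate(img, template, cv2.TM_SQDIFF)` | For an `A x B` image and an `a x b` template, the output has size `(A-a+1) x (B-b+1)` |
| `minval, maxval, minloc, maxloc = cv2.minMaxLoc(res)` | Returns the minimum and maximum values and their locations |
| `top_left = minloc if method in [cv2.TM_SQDIFF, cv2.TM_SQDIFF_NORMED] else maxloc; h, w = template.shape[:2]; bottom_right = (top_left[0] + w, top_left[1] + h); cv2.rectangle(img, top_left, bottom_right, 255, 2)` | Draws the matched region; locations are `(x, y)`, so the width is added to `x` and the height to `y` |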

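    import cv2
    import numpy as np

    img = cv2.imread("1.jpg")
    img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)  # must match the grayscale template
    template = cv2.imread("2.jpg", 0)
    h, w = template.shape[:2]
    res = cv2.matchTemplate(img_gray, template, cv2.TM_CCOEFF_NORMED)
    threshold = 0.8
    loc = np.where(res >= threshold)  # every location scoring above the threshold
    for p in zip(*loc[::-1]):         # loc is (rows, cols); reverse it to get (x, y)
        top_left = (int(p[0]), int(p[1]))
        bottom_right = (top_left[0] + w, top_left[1] + h)
        cv2.rectangle(img, top_left, bottom_right, (0, 0, 255), 2)
    cv2.imshow("title", img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()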
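The output size and the `(x, y)` convention are the two details that most often go wrong in template matching. Below is a NumPy-only sketch of the squared-difference method (the idea behind `TM_SQDIFF`); `match_sqdiff` is a hypothetical helper and the image is random test data, with the template cut from a known position.

```python
import numpy as np

def match_sqdiff(img, template):
    """Slide the template over the image and sum the squared differences."""
    A, B = img.shape
    a, b = template.shape
    res = np.empty((A - a + 1, B - b + 1), np.float64)
    for y in range(res.shape[0]):
        for x in range(res.shape[1]):
            diff = img[y:y + a, x:x + b] - template
            res[y, x] = np.sum(diff * diff)
    return res

rng = np.random.default_rng(1)
img = rng.integers(0, 256, size=(12, 16)).astype(np.float64)
template = img[4:7, 9:14].copy()       # 3 rows x 5 columns, cut at row 4, column 9

res = match_sqdiff(img, template)
row, col = np.unravel_index(np.argmin(res), res.shape)   # smallest = best match
top_left = (int(col), int(row))                          # (x, y), like minloc
bottom_right = (top_left[0] + template.shape[1],         # x + width
                top_left[1] + template.shape[0])         # y + height
```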

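| Function | Description |
|---|---|
| `hist = cv2.calcHist(images, channels, mask, histSize, ranges)` | Histogram; `images` is a list, e.g. `hist = cv2.calcHist([img], [0], None, [256], [0, 256])` |
| `eq = cv2.equalizeHist(img)` | Histogram equalization |
| `clahe = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8)); img_clahe = clahe.apply(img)` | Adaptive histogram equalization (CLAHE) |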

    import cv2
    import matplotlib.pyplot as plt

    img = cv2.imread("1.jpg")
    color = ['b', 'g', 'r']
    for i, col in enumerate(color):  # one histogram per BGR channel
        hist = cv2.calcHist([img], [i], None, [256], [0, 256])
        plt.plot(hist, color=col)
        plt.xlim([0, 256])
    plt.show()
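Histogram equalization remaps gray levels through the cumulative histogram so that the output uses the full 0 to 255 range. The sketch below is the textbook CDF mapping in NumPy; `equalize_hist` is a hypothetical helper, its rounding may differ slightly from `cv2.equalizeHist`, and it assumes the image is not a single constant value.

```python
import numpy as np

def equalize_hist(img):
    """Return (equalized image, histogram) for a uint8 grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]                  # CDF at the darkest level present
    lut = np.round((cdf - cdf_min) / (img.size - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img], hist                      # apply the lookup table per pixel

# Low-contrast test image: all values between 50 and 90
img = np.array([[50, 50, 60, 60],
                [60, 70, 70, 70],
                [70, 80, 80, 80],
                [80, 80, 90, 90]], dtype=np.uint8)
eq, hist = equalize_hist(img)
```

After equalization the darkest level maps to 0 and the brightest to 255, while the ordering of gray levels is preserved.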

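Fourier: any periodic signal can be expressed as a sum of sine functions. The Fourier transform represents the signal in the complex frequency domain.

Filtering: a low-pass filter keeps the low frequencies and blurs the image; a high-pass filter keeps the high frequencies and emphasizes edges and detail.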
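Low-pass and high-pass filtering are complementary: splitting the spectrum with a mask and its inverse yields two images that add back up to the original. A minimal NumPy sketch, where `split_frequencies` and the circular mask of radius 4 are illustrative assumptions rather than anything prescribed by OpenCV:

```python
import numpy as np

def split_frequencies(img, radius):
    """Split an image into low- and high-frequency parts with a circular mask."""
    rows, cols = img.shape
    f_shift = np.fft.fftshift(np.fft.fft2(img))      # zero frequency at the center
    y, x = np.ogrid[:rows, :cols]
    dist2 = (y - rows // 2) ** 2 + (x - cols // 2) ** 2
    low_mask = dist2 <= radius ** 2                  # True near the center

    def back(spectrum):
        return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum)))

    low = back(f_shift * low_mask)                   # low-pass: smooth content
    high = back(f_shift * ~low_mask)                 # high-pass: edges and detail
    return low, high

rng = np.random.default_rng(2)
img = rng.integers(0, 256, size=(32, 32)).astype(np.float64)
low, high = split_frequencies(img, radius=4)
```

The average brightness lives in the zero-frequency term, so it ends up entirely in `low`, and `high` averages to zero.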

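| Function | Description |
|---|---|
| `img32 = np.float32(img)` | The input image must first be converted to `np.float32` |
| `dft = cv2.dft(img32, flags=cv2.DFT_COMPLEX_OUTPUT)` | Fourier transform |
| `cv2.idft()` | Inverse Fourier transform |
| `dft_shift = np.fft.fftshift(dft)` | In the raw result the zero-frequency component sits in the top-left corner; it is usually shifted to the center for display |
| `dft_ishift = np.fft.ifftshift(dft_shift)` | Undoes the shift, restoring the layout of `dft` |
| `magnitude_spectrum = 20*np.log(cv2.magnitude(dft_shift[:,:,0], dft_shift[:,:,1]))` | `cv2.dft` returns two channels (real and imaginary parts); they must be converted to a magnitude image before display |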

    import cv2
    import numpy as np
    import matplotlib.pyplot as plt

    img = cv2.imread('1.jpg', 0)
    img32 = np.float32(img)
    rows, cols = img.shape
    crows, ccols = rows // 2, cols // 2  # np.int8 would overflow for sizes above 127

    # NumPy FFT: high-pass filter (zero out the low frequencies at the center)
    f = np.fft.fft2(img)
    fshift = np.fft.fftshift(f)
    magnitude_spectrum = 20 * np.log(np.abs(fshift))
    fshift[crows-50:crows+50, ccols-40:ccols+40] = 0
    f_ishift = np.fft.ifftshift(fshift)
    f_idft = np.fft.ifft2(f_ishift)
    image_back = np.abs(f_idft)

    # OpenCV DFT: display the magnitude spectrum
    dft = cv2.dft(img32, flags=cv2.DFT_COMPLEX_OUTPUT)
    dft_shift = np.fft.fftshift(dft, axes=(0, 1))  # shift rows and columns only, not the channel axis
    magnitude_spectrum = 20 * np.log(cv2.magnitude(dft_shift[:, :, 0], dft_shift[:, :, 1]))
    plt.subplot(121), plt.imshow(img, cmap='gray')
    plt.title('src'), plt.xticks([]), plt.yticks([])
    plt.subplot(122), plt.imshow(magnitude_spectrum, cmap='gray')
    plt.show()

    # OpenCV DFT: low-pass filter (keep only the low frequencies at the center)
    mask = np.zeros((rows, cols, 2), np.uint8)
    mask[crows-50:crows+50, ccols-40:ccols+40] = 1
    fshift = dft_shift * mask
    f_ishift = np.fft.ifftshift(fshift, axes=(0, 1))
    f_idft = cv2.idft(f_ishift)
    image_back = cv2.magnitude(f_idft[:, :, 0], f_idft[:, :, 1])

    # Zero-pad the image to a size for which the DFT is fastest
    nrows = cv2.getOptimalDFTSize(rows)
    ncols = cv2.getOptimalDFTSize(cols)
    nimg = np.zeros((nrows, ncols), np.uint8)
    nimg[:rows, :cols] = img

    # Frequency response of common kernels
    mean_filter = np.ones((3, 3))
    x = cv2.getGaussianKernel(5, 10)
    gaussian = x * x.T
    scharr = np.array([[-3, 0, 3], [-10, 0, 10], [-3, 0, 3]])
    sobel_x = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]])
    sobel_y = np.array([[-1, -2, -1], [0, 0, 0], [1, 2, 1]])
    laplacian = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]])
    filters = [mean_filter, gaussian, scharr, sobel_x, sobel_y, laplacian]
    filter_name = ['mean_filter', 'gaussian', 'scharr', 'sobel_x', 'sobel_y', 'laplacian']
    fft_filters = [np.fft.fft2(k, (16, 16)) for k in filters]
    fft_shifted = [np.fft.fftshift(k) for k in fft_filters]
    mag_spectrum = [np.log(np.abs(k) + 1) for k in fft_shifted]
    for i in range(6):
        plt.subplot(2, 3, i + 1), plt.imshow(mag_spectrum[i], 'gray')
        plt.title(filter_name[i]), plt.xticks([]), plt.yticks([])
    plt.show()

| Function | Description |
|---|---|
| `cv2.cornerHarris(img, blockSize, ksize, k)` | Harris corner detection, e.g. `dst = cv2.cornerHarris(gray, 2, 3, 0.04); img[dst > 0.01*dst.max()] = [0, 0, 255]; plt.imshow(img)` |


SIFT

    import cv2
    import matplotlib.pyplot as plt

    img = cv2.imread('1.jpg')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    sift = cv2.SIFT_create()
    kp = sift.detect(gray, None)                        # detect keypoints
    img = cv2.drawKeypoints(gray, kp, img)
    keypoints, keypoints_desc = sift.compute(gray, kp)  # compute their descriptors
    plt.imshow(img)

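| Function | Description |
|---|---|
| `bf = cv2.BFMatcher(crossCheck=True)` | Creates a brute-force matcher |
| `bf = cv2.FlannBasedMatcher()` | Uses the FLANN library; matching is faster |
| `matches = bf.match(des1, des2)` | One-to-one matching |
| `matches = bf.knnMatch(des1, des2, k=2)` | k-nearest-neighbor matching |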

    import cv2
    import matplotlib.pyplot as plt

    img1 = cv2.imread('1.jpg', 0)
    img2 = cv2.imread('2.jpg', 0)
    sift = cv2.SIFT_create()
    kp1, des1 = sift.detectAndCompute(img1, None)
    kp2, des2 = sift.detectAndCompute(img2, None)
    bf = cv2.BFMatcher(crossCheck=True)  # keep a pair only if each is the other's best match
    matches = bf.match(des1, des2)
    matches = sorted(matches, key=lambda x: x.distance)  # best matches first
    img3 = cv2.drawMatches(img1, kp1, img2, kp2, matches[:10], None, flags=2)
    plt.imshow(img3)
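A common reason to ask for `k=2` neighbors is the ratio test: keep a match only when the best candidate is clearly closer than the second best. The notes above do not mention it, so treat this as an added illustration. The NumPy-only sketch below uses a hypothetical `ratio_test_matches` helper, made-up 2D descriptors, and an assumed ratio of 0.75.

```python
import numpy as np

def ratio_test_matches(des1, des2, ratio=0.75):
    """Brute-force 2-nearest-neighbor matching followed by the ratio test."""
    # Pairwise Euclidean distances, shape (len(des1), len(des2))
    dists = np.linalg.norm(des1[:, None, :] - des2[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        best, second = np.argsort(row)[:2]           # the k = 2 nearest neighbors
        if row[best] < ratio * row[second]:          # unambiguous match only
            matches.append((i, int(best), float(row[best])))
    return matches

des2 = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
des1 = np.array([[0.5, 0.0],      # clearly closest to des2[0]
                 [5.0, 0.0]])     # equally far from des2[0] and des2[1]
good = ratio_test_matches(des1, des2)
```

The second descriptor is rejected because its two nearest neighbors are equally good, which is exactly the ambiguous case the test is meant to filter out.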

Frame differencing is simple, but not very widely used.

$$D_n(x,y)=\|f_n(x,y)-f_{n-1}(x,y)\|$$

$$R_n(x,y)=\begin{cases}255 & D_n(x,y)>T\\0 & \text{otherwise}\end{cases}$$
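The two formulas translate almost directly into NumPy: `diff` is the difference image D_n and the returned mask is R_n. `frame_difference`, the threshold of 30, and the tiny test frames are illustrative assumptions.

```python
import numpy as np

def frame_difference(prev_frame, frame, threshold):
    """Return a 0/255 motion mask from two consecutive grayscale frames."""
    # Widen to int16 first so the subtraction cannot wrap around in uint8
    diff = np.abs(frame.astype(np.int16) - prev_frame.astype(np.int16))   # D_n
    return np.where(diff > threshold, 255, 0).astype(np.uint8)            # R_n

prev_frame = np.full((4, 6), 50, np.uint8)
frame = prev_frame.copy()
frame[1:3, 2:4] = 200        # a small "object" appears
frame[0, 0] = 55             # slight noise, below the threshold

motion = frame_difference(prev_frame, frame, threshold=30)
```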

Drawbacks

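Before foreground detection, the background is first trained: each background pixel is modeled with a Gaussian mixture model (GMM), and the number of Gaussians per pixel is chosen adaptively. In the test phase, each incoming pixel is matched against its GMM: if it matches one of the Gaussians it is classified as background, otherwise as foreground. Because the GMM keeps being updated throughout the process, the method is reasonably robust to dynamic backgrounds.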

    import numpy as np
    import cv2

    cap = cv2.VideoCapture('test.avi')
    kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3))
    fgbg = cv2.createBackgroundSubtractorMOG2()  # GMM background model
    while True:
        ret, frame = cap.read()
        if not ret:
            break
        fgmask = fgbg.apply(frame)
        # Opening removes small noise blobs from the foreground mask
        fgmask = cv2.morphologyEx(fgmask, cv2.MORPH_OPEN, kernel)
        # OpenCV 4.x returns (contours, hierarchy); 3.x returns three values
        contours, hierarchy = cv2.findContours(fgmask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
        for c in contours:
            perimeter = cv2.arcLength(c, True)
            if perimeter > 188:  # ignore small contours
                x, y, w, h = cv2.boundingRect(c)
                cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        cv2.imshow('frame', frame)
        cv2.imshow('fgmask', fgmask)
        k = cv2.waitKey(150) & 0xff
        if k == 27:  # Esc
            break
    cap.release()
    cv2.destroyAllWindows()
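To make the match-then-update idea concrete, here is a deliberately simplified background model with a single Gaussian per pixel. It is not a mixture model and not how `createBackgroundSubtractorMOG2` is implemented; the class name, learning rate, match threshold of 2.5 standard deviations, and variance floor are all assumptions for illustration.

```python
import numpy as np

class RunningGaussianBackground:
    """One Gaussian (mean, variance) per pixel, updated online."""

    def __init__(self, first_frame, alpha=0.05, k=2.5, init_var=25.0, min_var=4.0):
        self.mean = first_frame.astype(np.float64)
        self.var = np.full(first_frame.shape, init_var)
        self.alpha = alpha        # learning rate
        self.k = k                # match threshold in standard deviations
        self.min_var = min_var    # keeps the model from becoming over-confident

    def apply(self, frame):
        frame = frame.astype(np.float64)
        diff = frame - self.mean
        is_background = diff ** 2 <= (self.k ** 2) * self.var
        # Update the model only where the pixel matched the background
        rate = np.where(is_background, self.alpha, 0.0)
        self.mean += rate * diff
        self.var = np.maximum(self.var + rate * (diff ** 2 - self.var), self.min_var)
        return np.where(is_background, 0, 255).astype(np.uint8)

background_frame = np.full((6, 6), 100, np.uint8)
model = RunningGaussianBackground(background_frame)
for _ in range(10):
    model.apply(background_frame)          # learn the static scene

test_frame = background_frame.copy()
test_frame[2:4, 2:4] = 180                 # an object enters the scene
fgmask = model.apply(test_frame)
```

A real mixture model keeps several such Gaussians per pixel, which is what lets it absorb repetitive background motion such as swaying leaves.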

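Optical flow is the "instantaneous velocity" of the pixels of a moving object as projected onto the imaging plane. The per-pixel velocity vectors can be used for dynamic analysis of the image, for example object tracking.

**Brightness constancy:** the brightness of a given point does not change over time.

**Small motion:** positions do not change drastically over time. Only under small motion can the gray-level change caused by a unit displacement between consecutive frames approximate the partial derivative of the gray level with respect to position.

**Spatial coherence:** neighboring points in the scene project to neighboring points in the image, and neighboring points share the same velocity. The basic optical flow equation gives only one constraint, but there are two unknowns (the velocities in x and y), so the equations of n neighboring pixels are solved jointly.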
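The three assumptions lead to one linear equation per pixel, `Ix*u + Iy*v = -It`, and a window of pixels gives an overdetermined system solved by least squares. A minimal NumPy sketch of that single-window solve; `lucas_kanade_window`, the synthetic `scene` function, and the chosen motion are assumptions for illustration, and the pyramid used by OpenCV is omitted.

```python
import numpy as np

def lucas_kanade_window(frame0, frame1):
    """Estimate one (u, v) for the whole window by least squares."""
    Iy, Ix = np.gradient(frame0)             # spatial derivatives (axis 0 is y)
    It = frame1 - frame0                     # temporal derivative
    # Drop the border, where np.gradient falls back to one-sided differences
    Ix, Iy, It = (a[1:-1, 1:-1].ravel() for a in (Ix, Iy, It))
    A = np.stack([Ix, Iy], axis=1)           # one equation per pixel
    flow, *_ = np.linalg.lstsq(A, -It, rcond=None)
    return flow                              # array([u, v])

def scene(x, y):
    """Smooth synthetic brightness pattern."""
    return np.sin(0.2 * x) + np.cos(0.15 * y)

y, x = np.mgrid[0:40, 0:40].astype(np.float64)
true_u, true_v = 0.3, -0.2                   # small sub-pixel motion
frame0 = scene(x, y)
frame1 = scene(x - true_u, y - true_v)       # same scene moved by (u, v)

u, v = lucas_kanade_window(frame0, frame1)
```

The estimate is only approximate because the derivation is first order; with larger motion the small-motion assumption breaks down, which is why the pyramidal version exists.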

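| Function | Description |
|---|---|
| `cv2.calcOpticalFlowPyrLK()` | Sparse optical flow with the pyramidal Lucas-Kanade method; takes the previous frame, the next frame, and the points to track, and returns the new points, a per-point status flag, and the error |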

    import numpy as np
    import cv2

    cap = cv2.VideoCapture('test.avi')
    feature_params = dict(maxCorners=100, qualityLevel=0.3, minDistance=7)
    lk_params = dict(winSize=(15, 15), maxLevel=2)
    color = np.random.randint(0, 255, (100, 3))  # one random color per track
    ret, old_frame = cap.read()
    old_gray = cv2.cvtColor(old_frame, cv2.COLOR_BGR2GRAY)
    p0 = cv2.goodFeaturesToTrack(old_gray, mask=None, **feature_params)
    mask = np.zeros_like(old_frame)  # layer on which the tracks are drawn
    while True:
        ret, frame = cap.read()
        if not ret:
            break
        frame_gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        p1, st, err = cv2.calcOpticalFlowPyrLK(old_gray, frame_gray, p0, None, **lk_params)
        good_new = p1[st == 1]  # keep only the points that were found
        good_old = p0[st == 1]
        for i, (new, old) in enumerate(zip(good_new, good_old)):
            # Drawing functions need integer coordinates
            a, b = (int(v) for v in new.ravel())
            c, d = (int(v) for v in old.ravel())
            mask = cv2.line(mask, (a, b), (c, d), color[i].tolist(), 2)
            frame = cv2.circle(frame, (a, b), 5, color[i].tolist(), -1)
        img = cv2.add(frame, mask)
        cv2.imshow('frame', img)
        k = cv2.waitKey(150) & 0xff
        if k == 27:  # Esc
            break
        old_gray = frame_gray.copy()
        p0 = good_new.reshape(-1, 1, 2)
    cv2.destroyAllWindows()
    cap.release()

    import utils_paths  # local helper module (not part of OpenCV) that lists image files
    import numpy as np
    import cv2

    # Class labels: strip the leading ID and keep the first name on each line
    rows = open("synset_words.txt").read().strip().split("\n")
    classes = [r[r.find(" ") + 1:].split(",")[0] for r in rows]

    net = cv2.dnn.readNetFromCaffe("bvlc_googlenet.prototxt", "bvlc_googlenet.caffemodel")
    imagePaths = sorted(list(utils_paths.list_images("images/")))

    # Single image: resize, subtract the per-channel mean, build one blob
    image = cv2.imread(imagePaths[0])
    resized = cv2.resize(image, (224, 224))
    blob = cv2.dnn.blobFromImage(resized, 1, (224, 224), (104, 117, 123))
    print("First Blob: {}".format(blob.shape))
    net.setInput(blob)
    preds = net.forward()
    idx = np.argsort(preds[0])[::-1][0]  # index of the highest score
    text = "Label: {}, {:.2f}%".format(classes[idx], preds[0][idx] * 100)
    cv2.putText(image, text, (5, 25), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)
    cv2.imshow("Image", image)
    cv2.waitKey(0)

    # Batch: the remaining images go through the network as a single blob
    images = []
    for p in imagePaths[1:]:
        image = cv2.imread(p)
        image = cv2.resize(image, (224, 224))
        images.append(image)
    blob = cv2.dnn.blobFromImages(images, 1, (224, 224), (104, 117, 123))
    print("Second Blob: {}".format(blob.shape))
    net.setInput(blob)
    preds = net.forward()
    for (i, p) in enumerate(imagePaths[1:]):
        image = cv2.imread(p)
        idx = np.argsort(preds[i])[::-1][0]
        text = "Label: {}, {:.2f}%".format(classes[idx], preds[i][idx] * 100)
        cv2.putText(image, text, (5, 25), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)
        cv2.imshow("Image", image)
        cv2.waitKey(0)
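The blob printed above is just the image with the mean subtracted, scaled, and rearranged from height x width x channels to batch x channels x height x width. A NumPy-only sketch of that transformation; the `blob_from_image` helper is hypothetical, and it assumes the image is already resized and skips the optional resizing and channel swapping that `cv2.dnn.blobFromImage` can also perform.

```python
import numpy as np

def blob_from_image(image, scale=1.0, mean=(0.0, 0.0, 0.0)):
    """HxWxC image -> 1xCxHxW float32 blob, computed as scale * (image - mean)."""
    blob = (image.astype(np.float32) - np.asarray(mean, np.float32)) * scale
    return blob.transpose(2, 0, 1)[np.newaxis, ...]   # HWC -> CHW, then add batch axis

# Test image filled with exactly the mean color, so the blob should be all zeros
image = np.full((224, 224, 3), (104, 117, 123), np.uint8)
blob = blob_from_image(image, 1.0, (104, 117, 123))
```

For a batch, the same operation is applied to each image and the results are stacked along the first axis, which is why the second blob in the code above has a leading dimension equal to the number of images.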

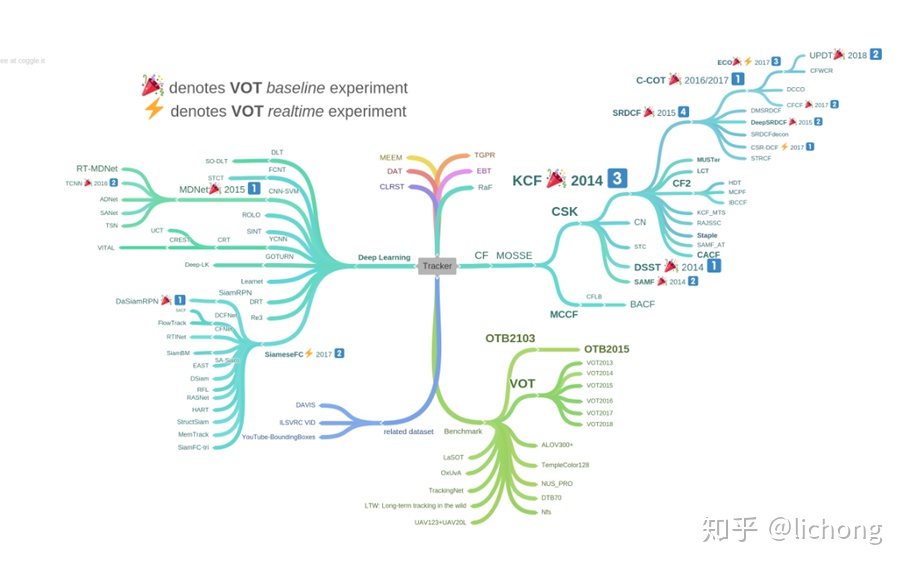

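KCF stands for Kernelized Correlation Filter. It was proposed in 2014 by João F. Henriques, Rui Caseiro, Pedro Martins, and Jorge Batista. The KCF family of algorithms includes KCF, DCF, and MOSSE. MOSSE was the first correlation-filter method in object tracking and the first to really show the potential of correlation filters, although its overly simple features kept its results from being the best.

In experiments on OTB50, KCF beat Struck, until then the best tracker on OTB50, by a wide margin in both precision and FPS.

Since then, object tracking has followed two main directions: real-time correlation filters and mainstream deep learning. In terms of speed, correlation filters still outperform the alternatives, and they are the main algorithmic framework used for object tracking in industry.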

    import argparse
    import cv2

    ap = argparse.ArgumentParser()
    ap.add_argument("-v", "--video", type=str, help="path to input video file")
    ap.add_argument("-t", "--tracker", type=str, default="kcf", help="OpenCV object tracker type")
    args = vars(ap.parse_args())

    # Requires opencv-contrib-python. From OpenCV 4.5.1 on, the boosting, tld,
    # medianflow and mosse trackers and MultiTracker live under cv2.legacy.
    OPENCV_OBJECT_TRACKERS = {
        "csrt": cv2.TrackerCSRT_create,
        "kcf": cv2.TrackerKCF_create,
        "boosting": cv2.TrackerBoosting_create,
        "mil": cv2.TrackerMIL_create,
        "tld": cv2.TrackerTLD_create,
        "medianflow": cv2.TrackerMedianFlow_create,
        "mosse": cv2.TrackerMOSSE_create,
    }
    trackers = cv2.MultiTracker_create()
    vs = cv2.VideoCapture(args["video"])
    while True:
        ret, frame = vs.read()
        if not ret:
            break
        # Resize to a width of 600 pixels, keeping the aspect ratio
        (h, w) = frame.shape[:2]
        width = 600
        r = width / float(w)
        dim = (width, int(h * r))
        frame = cv2.resize(frame, dim, interpolation=cv2.INTER_AREA)
        # Update every tracker and draw the boxes
        (success, boxes) = trackers.update(frame)
        for box in boxes:
            (x, y, w, h) = [int(v) for v in box]
            cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        cv2.imshow("Frame", frame)
        key = cv2.waitKey(100) & 0xFF
        if key == ord("s"):
            # Press "s" to select a region and start tracking it
            box = cv2.selectROI("Frame", frame, fromCenter=False, showCrosshair=True)
            tracker = OPENCV_OBJECT_TRACKERS[args["tracker"]]()
            trackers.add(tracker, frame, box)
        elif key == 27:  # Esc
            break
    vs.release()
    cv2.destroyAllWindows()