PyTorch

简介

介绍

安装

- 在官网选择自己的环境安装

conda install pytorch torchvision cudatoolkit=10.1 -c pytorch

变量

数据类型

| cpu类型 | gpu类型 | 说明 |

|---|---|---|

| torch.ShortTensor | torch.cuda.ShortTensor | 16-bit |

| torch.IntTensor | torch.cuda.IntTensor | 32-bit |

| torch.LongTensor | torch.cuda.LongTensor | 64-bit |

| torch.FloatTensor | torch.cuda.FloatTensor | 32-bit |

| torch.DoubleTensor | torch.cuda.DoubleTensor | 64-bit |

| torch.ByteTensor | torch.cuda.ByteTensor | 8-bit |

| torch.CharTensor | torch.cuda.CharTensor | 8-bit |

| torch.MalfTensor | torch.cuda.MalfTensor | 16-bit |

方法

| 函数 | 说明 |

|---|---|

| 类型推断 | a.type() |

| 类型判断 | isinstance(a,torch.FloatTensor) |

| gpu转化 | data=data.cuda() |

| 张量形状 | a.shape |

| 张量尺寸 | a.size() |

| 内存 | a.numel() |

| 维度 | a.dim() |

标量

| 函数 | 说明 |

|---|---|

| 创建标量 | a = torch.tensor(1.) |

向量/张量

| 创建矩阵 | 说明 |

|---|---|

| list矩阵 | a = torch.tensor([1.]) |

| numpy矩阵 | torch.from_numpy(np.ones(2)) |

| 给定维度 | a = torch.FloatTensor(1) |

| list矩阵 | a = torch.FloatTensor([2,3]) |

| 全一矩阵 | torch.ones(2) |

| 全零矩阵 | torch.zeros(3) |

| 对角矩阵 | torch.eye(3) |

| 随机正态分布矩阵 | torch.randn(2,3)torch.normal(mean=torch.full([10],0),std=torch.arange(1,0,-0.1)) |

| 随机均匀[0,1]矩阵 | torch.rand(2,3) |

| 随机聚云分布 | torch.randint(1,10) |

| 给定值的矩阵 | torch.full([2,3],7) |

| 递增矩阵 | torch.arange(0,10,2) |

| 等分数列 | torch.linspace(0,10,steps=4) |

| 等指数列 | torch.logspace(0,-1,steps=4) |

| 随机打散 | torch.randperm(10) |

初始化

| 创建矩阵 | 说明 |

|---|---|

| 未初始化数据 | torch.empty(2,3)a = torch.FloatTensor(2,3),易出现nan,inf等数据 |

| 随机均匀初始化 | torch.rand(2,3) |

| 根据传入的tensor随机初始化 | torch.rand_like(a) |

| 随机种子 | torch.manual_seed(23);np.random.seed(23) |

索引和切片

| 函数 | 说明 |

|---|---|

| 索引 | a[0,0] |

| 切片 | a[1:,2:,:,::2] |

| 索引 | a.index_select(1,[1,2]) |

| 索引 | a[:,0,...] |

| 掩码索引 | mask = x.ge(0.5)torch.masked_select(a,mask) |

| 打平后根据索引取 | torch.take(a,torch.torch.tensor([0,2,0])) |

维度变换

| 函数 | 说明 |

|---|---|

| 更改维度 | view/reshape两者相同,a.view(4,28*28) |

| 减少、增加维度 | squeeze/unsqueeze,squeeze挤压维度不为1则返回原值。a.unsqueeze(0) |

| 扩展 | expand扩展数据/repeat扩展数据并复制数据,a.expand(-1,32,14,14),a.repeat(1,32,14,14)拷贝次数 |

| 转置 | transpose/t/permute,t只适应于2维,transpose即可实现转置,还可维度交换。a.transpose(1,3).contiguous().view(4,3*32*32).view(4,3,32,32)维度污染a.transpose(1,3).contiguous().view(4,3*32*32).view(4,32,32,3).transpose(1,3)a.permute(0,2,3,1) |

broadcasting自动扩展

- broadcasting是一种机制,可以维度自动扩展,以适应大小维度不同的向量的运算或者叠加

Merge Split

| 函数 | 说明 |

|---|---|

| cat | torch.cat([a,b],dim=0),不支持broadcasting时,需要除0维度外的col维度相同 |

| stack | torch.stack([a,b],dim=0),与cat不同,会在dim=0新增维度,组合ab,ab维度必须完全一致 |

| split | 根据长度拆分a.size(5,1,1),长度相同a.split(2,dim=0),长度不同a.split([1,1,3],dim=0) |

| chunk | 根据数量拆分a.chunk(2,dim=0) |

运算

基本算数

| 函数 | 说明 |

|---|---|

| 加减乘除 | add,sub,mul,divtorch.all(torch.eq(a-b,torch.sub(a,b))) |

| 矩阵乘法 | 2维:torch.mm2维或3维: torch.matmul、@ |

| 平方 | a.pow(2),a**2 |

| 根号 | a.sqrt(),a**0.5 |

| 平方根的导数 | a.rsqrt() |

| 指数 | torch.exp(a) |

| 对数 | torch.log(a),默认以e为底 |

| 近似解 | 向下取整floor向上取整 ceil四舍五入 round整数部分 trunc小数部分 frac |

| 裁剪 | a.clamp(10)小于10的变为10a.clamp(0,10)小于0的变为0,大于10取10 |

统计运算

| 函数 | 说明 |

|---|---|

| 分布 | 范数:norm(n=1),mean,sum,累乘prod |

| 最值 | max,min,argmin,argmax,参数dim,keepdim |

| 最值 | kthvalue,前几名的top-5的值topk |

| 比较 | 判断每个元素eq,判断是否相等equal,gt,>,>=,<,<=,!=,== |

条件操作

- 为了更好的利用gpu的功能,pytorch的很多操作都进行了封装,实现高度并行

| 函数 | 说明 |

|---|---|

| 条件 | torch.where(condition,x,y)condition用来表示是从x还是y取值 |

| 查表 | gather(input,dim,index,out=None) |

test = torch.rand((5,4)) |

导数和梯度

- 导数derivate

- 偏微分partial derivate、梯度gradient(偏微分的集合,是一个标量)

x = torch.ones(1);w=torch.full([1],2.);w.requires_grad_();mse=F.mse_loss(torch.ones(1),x*w) |

| 函数 | 说明 |

|---|---|

| 手动导数 | torch.autograd.grad(mse,[w]),这里需要对w配置求导,w.requires_grad_(),求导一次会对生成图进行清理,可以设置retain_graph=True多次求导 |

| 自动化 | 对mse进行反向传播mse.backword(),在w.grad中查看w的梯度 |

网络

激活函数

- 除了torch库,

from torch.nn import funtional as F也有sigmoid函数

| 函数 | 说明 |

|---|---|

| sigmoid | torch.sigmoid(a) |

| tanh | torch.tanh(a) |

| ReLU | torch.relu(a)F.leaky_reluF.seluF.softplus |

损失函数

- MSE(Mean Squared Error)均方差

- Cross Entropy Loss:可用于二分类和多分类,与softmax搭配使用

- Hinge Loss:

| 函数 | 说明 |

|---|---|

| MSE | F.mse_loss(y,pred)与torch.norm(y,pred).pow(2)相同 |

| Cross Entropy | F.cross_entropy(x,y)与pred=F.softmax(y,dim=1);pre_log=torch.log(pred);F.nil_loss(pre_log,y)相同 |

| softmax | F.softmax(y,dim=1) |

网络层

- 模型类创建过程

- 引入

import torch.nn as nn - 类继承

nn.Module - 完成init、forward函数,pytorch会自动推导反向传播的函数

- 引入

class MLP(nn.Module): |

GPU加速

device = torch.device('cuda:0') |

验证测试

- 在训练每次迭代时,统计验证集的准确率,查看模型是否过拟合

for epoch in range(epochs): |

可视化工具

tensorboardX

- 安装

pip install tensorboardX - 使用tensorboardX,主要监听的是numpy数据,使用torch是,必须

param.clone().cpu().data.numpy()转换才能完成可视化过程

from tensorboardX import SummaryWritter |

visdom from facebook

- visdom简化了转化过程,直接就可以展示torch的数据

- 安装

pip install visdom - 开启服务:

python -m visdom.server - 使用visdom

from visdom import Visdom |

优化工具

过拟合和欠拟合

- 欠拟合:即使数据增加,acc和loss都无法得到更好的结果的时候,考虑是否模型表征能力不足。可以通过小量的数据训练模型,查看是否会过拟合来判断。

- 过拟合:训练数据表现越来越好,测试数据表现变得更差。

数据划分

- 数据划分:

train,val = torch.utils.data.random_split(train_db,[50000,100o0]) - 训练数据:

train_loader = torch.utils.data.DataLoader(train,batch_size=1,shuffle=True) - 验证数据:

val_loader = torch.utils.data.DataLoader(val,batch_size=1,shuffle=False)

正则化

L2正则化

- 在pytorch只需设置

weight_decay即可实现L2正则化,代表

net=MLP() |

L1正则化

- L1正则化需要手动实现

regularization_loss=0 |

动量

-

梯度更新公式:,梯度方向减小

-

增加动量后:,,增加了系数为的动量

-

pytorch的支持,只需更改

momentum参数

optimizer = optim.SGD(model.paramters(),lr=0.01,momentum=0.78,weight_decay=0.01) |

Learning Rate Decay

- 衰减lr在大多数情况下,都可以加快模型的训练

- pytorch实现衰减:

# 方法一 |

Early Stop和Dropout

- 防止过拟合

# 增加dropout |

- 在test时,不需要进行dropout,需要设置网络为evaluation

net_dropout.train5() |

卷积神经网络

卷积层

# 类实现 |

池化层

layer=nn.MaxPool2d(2,stride=2) |

上采样

layer=F.interpolate(x,scale_factor=2,mode='nearest') |

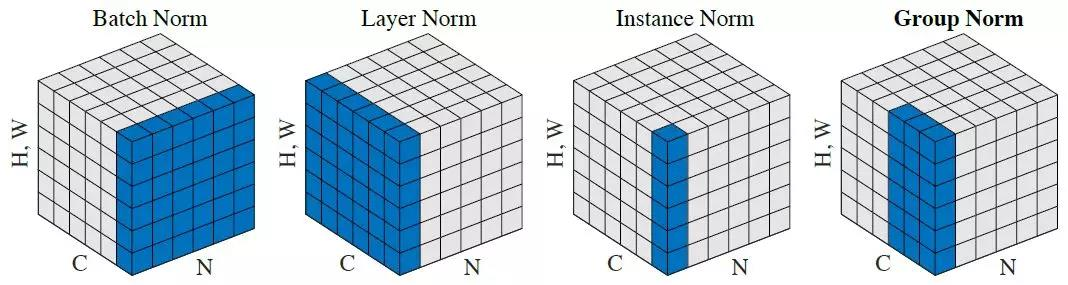

BN

- Image Normalization,图像数据一般为0~255,在训练时一般归一化

normalize = transform.Normalize(mean=[0.485,0.456,0.406],std=[0.229,0.224,0.225]) |

- Batch Normalization,在必须使用sigmoid函数时,超出0的范围越大,梯度变化越微弱,所以通过BN将数据归一化到N(0,1)的分布中,可以加速数据的收敛。

x=torch.rand(100,16,784) |

x=torch.rand(100,16,28,28) |

- 在进行测试时,无法获得,一般需要对running_mean、running_var赋值为全局的,同时将网络设定为预测模式

layer.eval() |

网络的属性

| 函数 | 说明 |

|---|---|

| 权重 | layer.weightlayer.weight.shape |

| 偏置 | layer.biaslayer.bias.shape |

nn.Module

- 是layer最基本的父类,linear、BatchNorm2d、conv2d、dropout

- 继承Module可以嵌套,使用容器Sequential()传入即可实现多个层的串联

- 参数可以有效管理,

net.parameter还可以自动命名 - 方便管理所有的层,

net.0.net,0号是自己本身 - 方便转义gpu,

net.to(device) - 加载和保存,

net.load_state_dict(torch.load('ckpt.mdl')),net.save(net.state_dict(),'ckpt.mdl') - train和test状态切换,

net.train(),net.eval() - 方便自定义类

nn.Sequential

- 容器Sequential()传入即可实现多个层的串联

nn.Parameter

- 通过Parameter包装后的变量,才可以加到

nn.parameter()中进行优化。torch.Tensor则不会被优化

self.w = nn.Parameter(torch.randn(outp,inp)) |

数据增强

- 常用的图像增强的手段:flip、rotate、scale、random move、crop、GAN、Noise

- Noise需要自己实现

transforms.Compose

- 容器

transforms.Compose可以实现多个操作的串联

train_loader = torch.utils.data.DataLoader( |

RNN

RNN

rnn = nn.RNN(input_size=100,hidden_size=10,num_layers=1) |

rnn = nn.RNNCell(input_size=100,hidden_size=10,num_layers=1) |

LSTM

rnn = nn.LSTM(input_size=100,hidden_size=10,num_layers=1) |

数据集

自定义数据集

- 继承dataset类,实现

__len__和__getitem__两个方法

class NumberDataset(Dataset): |

- 如果文件目录是按照类别存储的,可以使用内置函数,方便的转化

tf = transforms.Compose([ |

迁移网络

from torchvision.models import resnet18 |

代码总结

局部极小点获取

import matplotlib.pyplot as plt |

LR实现多分类

import torch |

Flatten

- 自定义展平功能并调用

class Flatten(nn.Module): |

Linear

- 实现线型层

class MyLinear(nn.Module): |

MLP

import torch |

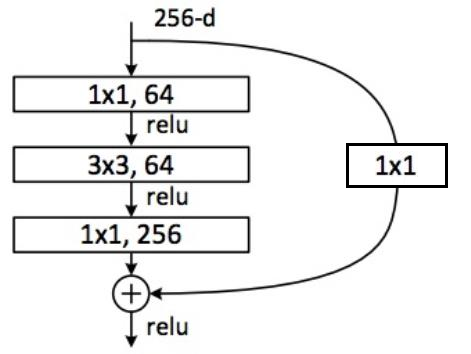

ResNet

- 基本单元的实现

class ResBlk(nn.Module): |

- 整体实现

import torch |

RNN预测sin函数

import numpy as np |